This paper examines the cultural counter-flow between ethnography and remote usability testing, specifically what such tools might offer ethnographic practice. I explore how remote usability testing can both extend and delimit ethnographers’ sight lines. Because remote testing has a narrow aperture, long sight line, poor context and quick turnaround, I invoke the metaphor of a spyglass in the hands of the ethnographer to understand this increasingly available digital research method. Remote usability testing can quickly access insights and novel footings, while simultaneously creating myopic, distorted or biased understandings. Theoretically, the history of usability studies is compared to that of archaeology as it transitioned from a cultural product focus to a context focus. Practically, several workflows are presented that use the strengths of ethnography and remote usability testing to enhance one another. Finally, ethnography is discussed as a craft-like competence, rather than a method, that crosses increasingly diverse methodological terrain.

Keywords: Design Ethnography, Usability Studies, Methodology

INTRODUCTION

Studying users and their experiences has become a central consideration of digital product development. To this end, digital departments often house social scientists with an expertise in seeking to understand the ways in which people use technological products. Within this environment, claims to this expertise have come from various social science disciplines, each bringing the strengths of their disciplines’ epistemologies and methodologies along with attendant caveats. In the case of Anthropology and ethnography, this has often meant entering as an outsider to a context already inhabited by Psychology, Human-Computer Interaction, Usability Studies, Design and Engineering. While EPIC has been a venue for furthering the value of ethnography in such contexts or the implications of having ethnographic methods practiced by those without extended training or fieldwork experience, the EPIC community has devoted less attention to the flow of ideas, methods and epistemologies in reverse—from the digital sciences into ethnography. When this has been addressed, the view has been negative, suggesting that UX has coopted ethnography (Amirebrahimi 2016, p. 87). This paper will examine various dimensions of this cultural counter-flow. What is the value of usability studies to the ethnographic community? What possibilities and limitations emerge when we consider such disciplinary hybridities? Moreover, how are industry tools, specifically remote usability testing (for the sake of this analysis), potentially extending and delimiting ethnography’s sight lines? Does an ethnographic stance have the ability to re-imagine the place of usability testing in the course of digital product development?

Viewing the Field from Afar

In his recent book, Sensemaking, Christian Madsbjerg (2017, p. 93) encourages those in business to eschew abstract analysis in favor of a kind of phenomenological, in-context research that reveals social actors in their rich, lived experiences. To illustrate, he contrasts the behavior of lions at a zoo—a contrived context—to lions’ behaviors on the African savannah. He exhorts the reader, “Instead of watching lions eating food from a bowl in a cage, go out and observe them hunting on the savannah. Escape the zoo.” (p. 96).

Indeed, there is no replacement for escaping the zoo in recognition that it is a synthetic environment that leads to limited insights about the behavior of the real deal in real context. Similarly, there is no replacement for researchers doing field research in order to gather rich data on their subjects in their context (the proverbial savannah, to use Madsbjerg’s term). From a business perspective, however, such “safaris” are expensive and time-consuming even if institutions are aware of the insight they provide. What would our lion researchers gain from looking in on their subjects at strategic points using trail cameras, for instance? While not fieldwork sensu stricto, this activity would certainly yield a useful sightline into the lives of lions in their habitats that would both corroborate and extend insights gained from observational fieldwork. Similarly, I argue that usability tools, specifically remote usability testing, have a valuable and unique place in the ethnographer’s toolbox as ways of observing humans in their context and gathering contextual feedback quickly.

Usability Testing Comes of Age

Research addressing digital technologies’ ease of use is as old as digital technology itself. Early in the development of graphical user interfaces and computation, research was conducted by the technologists themselves to validate and iterate on technological prototypes. Even as digital technology moved out of the lab and became more widely adopted (Bødker 2006), usability testing techniques largely remained laboratory-based.

In laboratory studies, “users” from a target demographic would be brought on-site where researchers would give tasks, note observations and probe for users’ underlying motivations (Baecker and Buxton 1987). Labs were set up with accoutrements to measure key indicators, such as time-on-task and perceived ease of use, to assess the “usability” of the experimental technology in question. The contextual bias of such a testing agenda is apparent to most ethnographers: the development of the methodology, the design of the lab space and the unnatural, unfamiliar technology limit labs’ ability to predict technology use patterns once the technology is released outside the laboratory setting. Speaking for many ethnographers, Ladner (2014) pointed out that such places can never be sites for ethnographic research “because the need to understand a prototype’s usability crowds out the ethnographic agenda” (p. 187). Ethnography, since the days that anthropologists disembarked from their armchairs and began stranding themselves in exotic locales, has required a particularistic being-in-place that allowed the researcher to meet subjects in their context. This is commonly thought to assist in creating an emic research perspective, one that seeks to understand how local people perceive and categorize the world, the logic behind their behavior, what has meaning for them, and how they perceive matters (Kottak 2006). To be ethnographic is to be emic.

By contrast, laboratory usability studies are the domain of usability researchers and technologists. Research subjects are removed from their daily routine and brought to a place where their behaviors—if not their individual persons—are the grist for a study focused on a piece of technology. The focus, the place, the terms of discourse among researchers all reveal the usability lab as a fundamentally etic location. Thus usability studies, for both focus and location, are distinct from, and antithetical to, ethnography. Indeed, the contextual shortcomings of lab-based usability testing contributed to a data vacuum in the digital technology qualitative research sector that has encouraged ethnography to advance in a complementary manner.

In order to address the shortcomings of lab-based usability research, a number of platforms have emerged for conducting usability research more quickly, in less contrived settings. Remote usability research platforms like Usertesting.com, UserZoom, Lookback, dscout, Userbrain and Morae offer researchers the opportunity to easily recruit participants of specific ages, genders, and socioeconomic groups to participate in studies that the research subjects complete in their own homes, in a café, or while walking down the street.

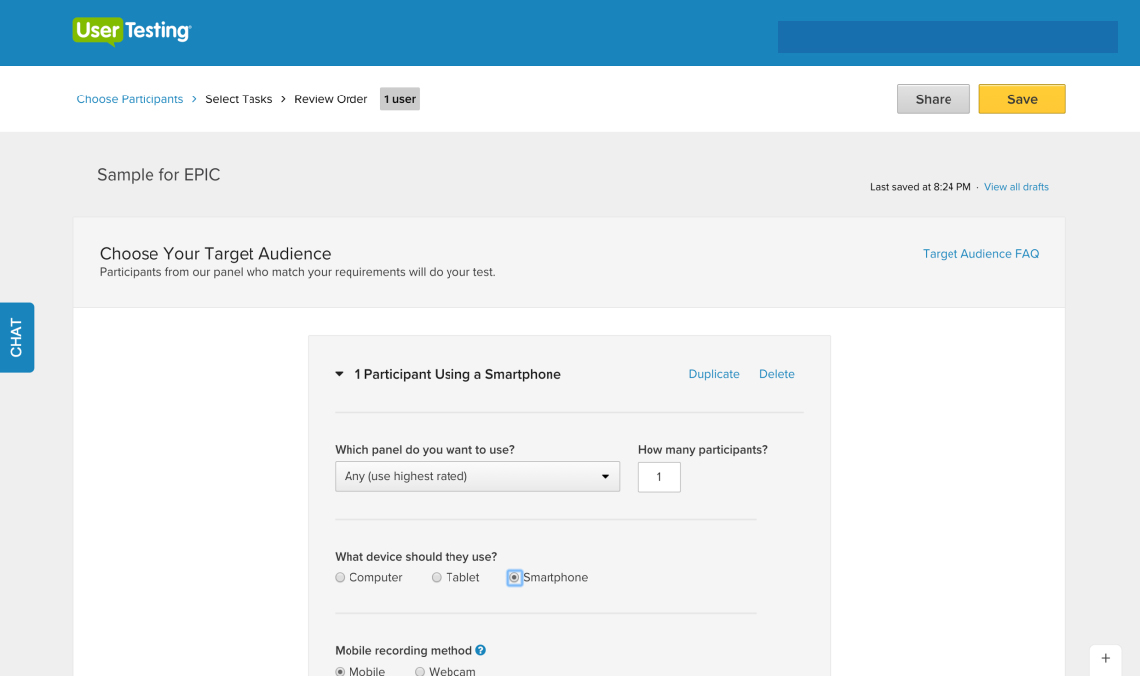

As an example of a typical un-proctored remote usability test, take the protocol for employing usertesting.com for a usability study. The researcher defines the scope and aims of the study, while crafting a series of questions and tasks for the participant. She specifies the age, gender, income range and nationality of the participant to be recruited.

Figure 1: Usertesting.com allows researchers to select participants based on gender, operating system, screen stereotype, age and income. Screenshot taken by author.

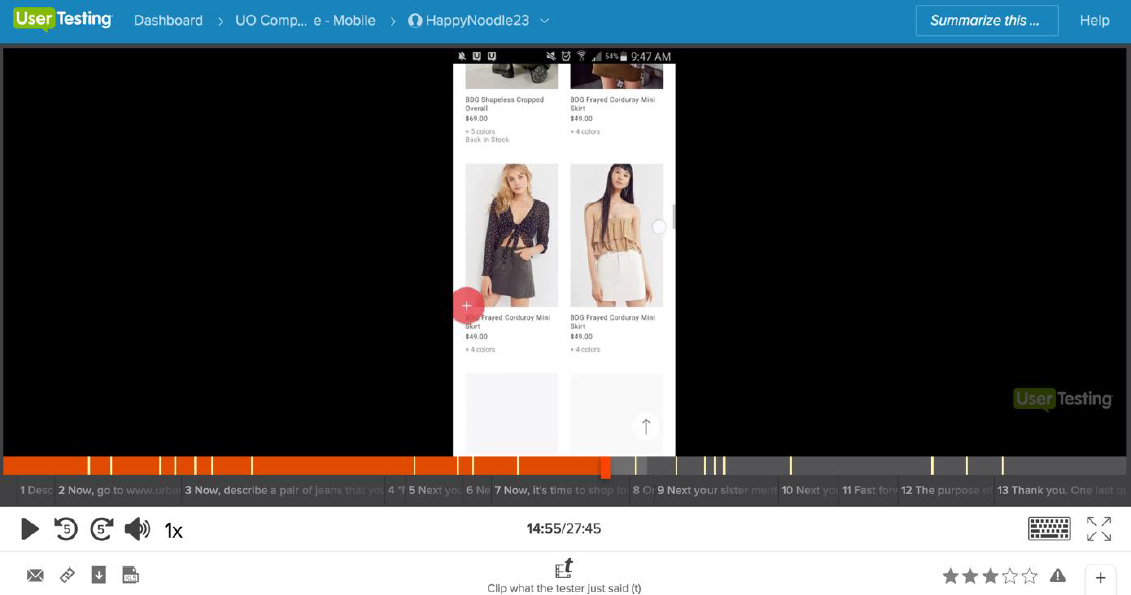

When she “runs” the test via usertesting.com’s website, a request for participation is sent to appropriate “testers” from a pre-recruited panel of participants. If these participants are currently free to complete a 15-min block of study participation, they accept the request. Upon accepting, they are sequentially introduced to the questions and tasks pre-determined by the researcher. An audio-video recording of the interactions is then uploaded to usertesting.com’s portal to be watched later by the UX researcher. In a proctored usability study, the protocol is much the same, though a time must be specified for the researcher and participant to virtually meet for a session that is facilitated by the researcher.

Figure 2: An audiovisual recording of the participants’ interactions is available for annotation and editing. Screenshot taken by author.

These methods of usability testing hold considerable advantages over traditional lab-based usability testing. First, recruiting is streamlined and study turnaround times are greatly compressed. Second, the effect of the physical environment, an unfamiliar place that is outside of the participant’s typical routine, is minimized. Third, affirmation bias derived from a participant’s desire to please the researcher is reduced in un-proctored testing by an anonymous recruitment process through the intermediary platform (provided that branding and first-person language are removed from the testing script and prototype). Fourth, remote usability testing obviates the need to build, maintain or lease expensive facilities. Taken together, these advantages have reduced the costs for conducting usability research on digital products, while simultaneously enhancing research’s ROI by lowering up-front cost.

It is not surprising, then, that industry trends indicate greater private sector use of remote usability testing platforms. Since its inception in 2013, usertesting.com’s industry survey has indicated year-over-year trends toward greater spending and more frequent testing (Usertesting.com, accessed September 15, 2017). The ubiquity of usability studies is demonstrated by the market penetration statistics offered on two of the largest service sites. Usertesting.com reports that more than 34,000 customers use their platform, including the top 10 web properties (https://www.usertesting.com/ who Accessed September 15, 2017). Similarly, UserZoom reports that since 2007, over 28,000 usability studies involving more than 3,000,000 participants have run through its platform (https://www.userzoom.com/ Accessed September 15, 2017). There is a clear trend of companies seeking quick, qualitative insights from remote usability testing.

COLLISIONS OF METHOD AND EPISTEMOLOGY

The observant reader may have noticed that both ethnography and remote usability testing have evolved to address the shortcomings of etic, lab-based testing. This move toward more robust usability research practices has coincided with a greater number of ethnographers finding employment in the private sector rather than the academy. I am representative of both of these trends: an anthropologist-ethnographer by training who regularly conducts remote usability testing in a private sector setting.

From my position as an ethnographer and UX researcher, I have observed evolving tensions of epistemology, methods and timelines. Epistemologically and methodologically, usability testing conducts research that appeals to instrumental rationalism (Madsbjerg and Rasmussen 2014, p. 26) and boasts a quick turnaround. By contrast, ethnography utilizes an abductive research approach that emphasizes depth, unstructured observation and context over rapidity or product-specific results. Many of these characteristics are difficult to sell to certain instrumental rationalists because of their unfamiliarity: to their sensibilities, ethnography feels more like aesthetics than analysis.

At the same time, the perceived value of contextual research has been increasing (Bødker 2006). Tech anthropologists are featured in the mainstream press (Singer 2014). Members at EPIC are well aware of this trend: ethnography is valuable for understanding the meaning of a putative solution in its context. In response to the valorization of context, usability studies have gone remote. What does this mean for ethnographers? I contend that it means both polarization and hybridity.

Polarization is a predictable reaction when two approaches offer competing solutions to a problem. The strengths and weaknesses of each are brought into relief as the approaches prove their relative values. But hybridity also occurs when the approaches are co-located within teams, or even within individual practitioners seeking to understand digital products/services within their contexts. In the case of remote usability testing and ethnography, both may co-exist within the team at a given corporation and within each of the practitioners. There is reciprocal counter-flow embedded in such a hybrid practice: researchers conducting both kinds of research will naturally borrow techniques from each practice. Ethnography cannot be usabilified without usability being ethnographized. This got me thinking: how does the logic of usability studies affect the ethnography I do? Is my ethnographic imagination somehow at risk of dilution? What is at stake if it is? This is the danger of hybridity: its impurity (Douglas 1969). So then it should be asked, what can remote usability testing lend to ethnographic practice? Is it possible for remote usability testing to be ethnographized, that is, used by an ethnographer to further an emic understanding of a study population? I argue that it is.

In fact, ethnography has been used previously to bridge the present, in both the temporal sense and the spatial sense, in archaeology, another discipline that often must draw conclusions based upon fragmentary data. Lessons from the gradual adoption of ethnography into archaeological practice, and from the reciprocal incorporation of greater diachronic depth (addressing a critique of early ethnography), offer parallels for understanding hybrid ethnography+usability praxis.

ARCHAEOLOGIZING REMOTE TESTING: TOWARD ETHNO-USABILITY

Archaeology is a social science often tasked, as are remote usability studies, with formulating explanations from fragmentary data, distant research subjects and incomplete contexts. Its evolution over the second half of the 20th century parallels several trends affecting usability studies. Thus, it may offer insights into the process of ethnographizing a previously stenographic discipline. In 1958, Gordon Willey and Philip Phillips weighed in on the importance of cultural interpretation in archaeology. “American archaeology is anthropology or it is nothing,” they said (Willey and Phillips 1958, p. 2). In this statement, they asserted that the goals of archaeology overlapped with the goals of anthropology, which are to answer questions about humans’ social relations. Their statement contributed to the processual archaeological movement, which represented a break from the prevailing cultural-historical school of thought. This schism was important because it resisted the cultural-historical idea that any insight that artifacts retained about historical people and their ways of life was lost as the artifacts became part of the archaeological record (Wylie 2002). The next generations of archaeologists would attempt to understand the material past in context. In short, the emphasis shifted from the artifacts to the people who were using the artifacts. Shifting this emphasis, however, came with theoretical and methodological challenges due to the often fragmentary, incomplete nature of the archaeological record. Wylie elaborates the conundrum in which archaeologists found themselves:

Archaeologists seem trapped: either they must limit themselves to a kind of “artifact physics” (DeBoer and Lathrap 1979: 103), venturing little beyond description of the contents of the archaeological record, or, if committed to anthropological goals, they must be prepared to engage in the construction of just-so stories as the only means available for drawing interpretative conclusions about the cultural past.

Facing incomplete data contained in the archaeological record, the anthropological aspiration of archaeology seemed to rest uneasily upon either unimportant, obvious trivia or paradigm-informed speculation (i.e., an interpretive dilemma, Wylie 2002, p. 117). Practitioners tasked with gleaning customer insights solely through remote usability methods face a similar dilemma: report rote empirical findings with little interpretation (a kind of usability physics championed by those who advocate quantification of usability), or fabricate a theoretical architecture from third-party sources (e.g., market reports, competitive audits) to allow for more informed analysis.

The shift toward studying context of use rather than strict usability poses theoretical and methodological challenges for usability researchers similar to those that archaeologists faced, as the transition toward interpretation pushes the boundaries of product-centered research protocols. Early forms of usability testing shared the cultural-historical school’s emphasis on artifacts over social interactions. The beliefs, feelings, and social life of test subjects were considered somewhat beyond the scope of technology testing. I argue here that, similar to mid-20th century archaeology, usability testing must be ethnographic or it is nothing.

If this is the case, usability studies might look to the development of archaeology over the course of the 20th century and incorporate similar techniques to address limitations of aperture and depth. The processual and interpretive (post-processual) archaeologists compensated for the dearth of complete material evidence in the archaeological record with two broad strategies intended to reduce uncertainty: alliances with positivist techniques and incorporation of ethnography. As processual archaeology developed during the mid to late 20th century, there came to be an emphasis on hypothesis-driven, experimental and quantitative methods to help archaeologists engage in the interpretation of past cultures with less risk of bias and speculation (Wylie 2002, pp. 62-64). This work, spearheaded by Lewis Binford, was, in today’s terminology, an attempt at a ‘data driven’ archaeology that, if the right experimental design was applied, would produce durable insights into the past.

After some consternation stemming from the difficulties arising from extreme adherence to positivist principles, post-processual archaeology emerged with a greater focus on interpretation of the past and the past’s role in the present. I see an analog with the current moment in usability research: positivist alliances have too often added less value than anticipated to businesses, whose goals are implicitly anthropological whether or not that is made explicit, as big data’s insights have proven less robust than expected. Hence, we can observe a convergence in usability studies that is analogous to the second trend that archaeology experienced: contextualization through ethnography. Ethnoarchaeology, the use of ethnography to inform archaeological study, was developed to facilitate extrapolative interpretation of artifacts by learning about social contexts that might resemble the social contexts that bore the artifacts and sites being studied (David and Kramer 2001). This approach proved to be a valuable grounding for archaeology as it transitioned away from pure materialist description. Ethnography became inserted within archaeological practice to provide greater context, while anthropology gained greater reach and time-depth through archaeological and historical studies. Will a similar process bind together usability studies and ethnography? If so, ethnography will gain according to usability’s strengths, and vice versa. This theoretical-methodological construct contributes value when considering the possibilities and shortcomings of ethnographized usability.

THE ETHNOGRAPHER’S SPYGLASS: POSSIBILITIES AND LIMITS OF ETHNO-USABILITY

What possibilities are created by the prospect of ethno-usability? Traditional ethnography has a wide aperture, human-scale sight line, thick context and results that are delayed by time necessary for analysis. By contrast, remote usability testing has a narrow aperture, long sight line, imperfect context but near instantaneous results. The complementarity is apparent. To understand the prospects and perils of ethnography+usability testing, let’s explore the metaphor of a spyglass in the hands of the ethnographer.

Opportunities of Reach and Footing

The ethnographer’s spyglass has the obvious advantage of being able to conduct research and deliver results at great distance, nearly instantly. While traditional ethnographic research methods require travel to the site, lengthy (at least to the sensibilities of some business stakeholders) stays onsite, and time to synthesize findings, remote usability testing offers results to pointed questions within hours. At the same time, ethnography has grappled with the question of reach through the development of multi-sited ethnography (Marcus 1986, pp. 171-173). While multi-sited ethnography addresses some problems of reach, it is prohibitively time- and travel-intensive in corporate contexts. In this case, remote usability testing allows the ethnographer to follow threads through other field sites after familiarization with the intended study population. Moreover, remote usability testing can provide a medium of communication to continue to build on a research foundation built through traditional, in-context ethnography. What about the question that I wish I’d asked a key participant, but didn’t know would be important? If remote testing is arranged in the research plan from the outset of the study, some remote testing platforms allow for a particular person to be re-contacted to interact with digital prototypes during later development stages. This ability to quickly and inexpensively reconnect with the field, either through recruiting more people of an intended demographic or by reaching back to participants with whom rapport has been previously built, is immensely valuable as products iterate and requirements evolve. To be able to quickly fill in the gaps of an established ethnographic viewpoint of a population is tremendously valuable for mitigating risk and retaining stakeholder buy-in.

Less obvious but equally valuable is remote usability testing’s ability to create novel footings for the researcher, participants and stakeholders. Erving Goffman introduced the concept of footing, describing it partially as occurring when a “participant’s alignment, per se, or stance, or posture, or projected self is somehow at issue” (Goffman 1981, p. 128). Footings can change as an interaction progresses. For instance, Goffman goes on to elaborate that two people might begin with an initial footing based on previous knowledge of one another, expectations, clothing or greetings, and then evolve those footings through the course of a conversation based on all of the signs and signals that are exchanged and interpreted during the interaction. In the case of ethnographic research, issues of footing occur regularly, ranging from the power asymmetry of researcher and participant to the privileged access that gender can confer upon certain researchers investigating particular topics. Lacking a physical presence, institutional affiliation, or even further probes or responses to initiate the cascade of subsequent footings, the pre-determined scripts of remote un-proctored usability testing serve to turn the dynamic of the discussion toward familiar monologue. For example, if I conduct research for a brand selling intimate clothing, the anonymity and altered footings afforded by the usability testing platforms give me privileged access that my embodied presence as a male 30-something researcher would not allow in a usual ethnographic setting. (Granted, in-person ethnography with a trusted researcher would yield rich data of a different sort. I am not suggesting that remote testing replaces such knowledge, but that I gained access to knowledge I did not have before through the remote testing platform.)

Rather than leveraging participant-researcher rapport as ethnography has traditionally done, remote testing studies can avoid rupturing an emic stance by leveraging the participant’s relationship with her smartphone. Many research participants, especially those on the panels provided by remote usability testing services, have developed a deep relationship with the smartphone. For certain populations, this attachment leans toward cyborg-like blurred boundaries between person and machine. Because of the preexisting intimacy with smartphones, the researcher conducting a remote usability test has the benefit of immediately being disembodied, transferred and reanimated as a series of questions displayed on the screen of a familiar device. This gives the remote researcher the advantage that comes from protecting the fabric of daily life.

Furthermore, remote usability testing has the opportunity to deepen a corporate ethnographer’s understanding of certain digital offerings by collecting data in the field during micro-moments. A micro-moment is “an intent-rich moment where a person turns to a device to act on a need to know, go, do or buy” (Adams et al. 2015). For researchers concerned with the role of digital products and services in their participants’ lives, using remote testing to allow participants to take part while they are at a café, on the couch or walking down the street gives insight into the way that usability matters in the field, where multiple stimuli are competing for users’ attention across time and apps. For example, when an online retailer conducts usability testing of various site features, remote usability tests are deployed to participants who are at home, in their dorm, or in a café. Researchers can choose to start their research in mid-shopping task. By testing in this way, researchers are able to recreate and evaluate the semiotics of orientation, both online and real-world, that are so essential for studying micro-moments but are more difficult to access through standard analog diary studies that can remove participants from the multitasking mind-space of their smartphones (SMS-based diary studies do not suffer from this shortfall).

The immediacy and reach of remote usability testing tools can assist in gathering preliminary findings. Research participants can be asked to use the camera function on their phones to perform activities commonly associated with in-person ethnography. Participants might be asked to give a tour of their closet, show where voice UI devices are in their homes, analyze the contents of a purse or wallet, or describe in detail the last hour of social interactions they had. Used in this way, remote usability tools can serve to test the assumptions of larger scale ethnographic assignments, thus reducing the costs and risks of a complete research project.

The audiovisual artifacts that are created during the remote usability session also offer executive stakeholders a different kind of access to their customers. Executives or other stakeholders who are accustomed to learning about customer trends through quantitative abstraction are often compelled to re-think a decision after being presented with a video montage of remote usability clips of customers, in their own words, dispelling persistent yet incorrect assumptions held by stakeholders. Opportunities of reach, in this case, extend to the influence that ethnographer-researchers might have by giving stakeholders more visibility into the daily lives of people.

Limits of Aperture and Depth

While remote usability testing is a robust tool, like most research methods, it has limits beyond which distorted results are likely. Not only is it limited, but remote usability testing also has considerable potential to be abused, so its shortcomings should be at least as well understood as the possibilities it creates. Its potential for abuse stems from the allure of the empirical. For those who do not fully understand the importance of context, thick description, etc. that ethnographers understand, the value of ethnography is in its obvious empiricism. Ethnography is highly empirical in that it is grounded in observation and experience rather than theory or pure logic. If ethnography is employed within an organization primarily for its empiricism, and not its insights, there may be temptation to use remote usability testing as an empirical, qualitative stand-in for ethnography.

While remote testing is empirical, its narrow aperture severely delimits the explanatory value of its results without additional contextualization. To extend the spyglass metaphor quite literally, imagine yourself in a high-rise office building using a spyglass to observe people crowding around a newsstand several stories down and across the street. You see that people are jostling, and working around one another for space. They shift, lines form and dissipate. The customers’ movements are irritated and brisk. You see most of them are men. The vendor at the newsstand is gesturing at the small crowd, seemingly to indicate that the desirable item is no longer available. You strain to read lips, scanning up and down the street looking for someone who bought the item in question before it ran out. Empirically, you know that demand for a particular product exceeded supply. But why? What was it? Where are the other patrons? What did you miss? While what you saw is important for understanding what happened on the street today (and you surely saw more than you would have by keeping your attention within the office), you don’t know what you were unable to see because of the spyglass’s narrow aperture. Similarly, remote usability testing used without other research methods, like ethnography, data/analytics or surveys, to inform broader contexts is liable to provide limited insights that are both shallow and open to interpretation that is fraught with confirmation biases.

Remote usability testing is not “Thick” (Geertz 1973) for at least two important reasons. First, its shallow nature must be remembered when tasks or tests attempt to establish personal, contextual parameters such as value, importance or urgency. Fifteen-minute testing slots in which participants are rewarded for completing tasks create an incentive structure that undermines the method’s ability to predict urgent, contextual or habitual behavior because those temporal guide rails are artificially pre-determined. The lack of temporal modulation of testing, a feature of long-term observational research, undermines the researcher’s ability to judge how much a person cares about the digital product involved or the task at hand. Second, usability testing may be able to integrate a new participant’s data into a known knowledge base, but there is generally not time to collect sufficient biographical information to place the participant in her individual context. Taken together, these shortcomings severely delimit the ability of data gathered via remote usability testing to stand without contextual data of other kinds corroborating analysis.

PRACTICE AND POSSIBILITIES: ETHNOGRAPHY, USABILITY AND BUSINESS WORKFLOWS

Given the strengths and weaknesses associated with remote usability testing, where might it fall within typical product development workflows? The key to creating a research plan in which the overall methodology is stronger than the sum of the constituent methods (Leedy and Downes-Le Guin 2006) is oscillating between usability testing and ethnography as needed to ensure a recipe with the right balance and order to create a product primarily composed of synergistic strengths.

Standard Project Work Flow: Ethnography → Remote Usability Testing → Product Release

This workflow positions ethnography as the exploratory task and remote usability testing as the confirmatory one. It is the most straightforward process for using ethnography’s depth and context gathering to offset the two primary weaknesses of remote usability testing: aperture and depth. At the same time, it takes advantage of remote usability testing’s ability to reach back to the field quickly to gather insights. However, this workflow may leave valuable data undiscovered by failing to deploy remote usability testing in the early stages to contact more field sites or explore novel footings with participants.

Experimental Work Flow 1: Remote “Usability” Testing → Ethnography → Remote Usability Testing → Product Release

In contrast to the standard workflow detailed above, the first experimental workflow places a round of remote usability testing (not truly usability testing, per se, because its primary concern is ethnographic reconnaissance rather than product-centric insights) prior to standard ethnographic engagement. This might be useful for consultancies or low-budget projects seeking to maximize every moment of work in the field. In this case, remote usability testing is leveraged for its reach/cost-effectiveness. Its shortcomings are smoothed over in the next stage of data gathering. After traditional ethnographic engagement, remote testing is again used to re-contact the field and validate the product in question.

Experimental Work Flow 2: Remote “Usability” Testing / Ethnography → Remote Usability Testing → Product Release

This workflow is the most experimental. It uses remote usability testing from the field to introduce the creative tension of multiple field sites early in the analysis. This opens up possibilities for a greater range of coincidences and contrasts. For example, if you are conducting a project on the adoption of mobile phones in Argentina, you might set up a primary ethnographic site in a provincial town with access to a rural area, while simultaneously conducting remote usability tests with participants in Argentina’s urban center, Buenos Aires. Remote testing is used again to re-contact the field and confirm an approach. As a point of strength, Experimental Workflow 2 makes use of all of the positive aspects of both methods, leading to a multi-faceted, rich picture of the subject matter. This approach has downsides too. First, conducting research in multiple fields can tax a small research team or prolong their time on the road. Second, rich, sometimes contradictory data may accurately convey the complexity of the real world, but may also lengthen the time necessary for analysis. Moreover, it can make stakeholder buy-in difficult if different people use contradictory data to entrench pre-existing agendas, causing gridlock.

ETHNOGRAPHY AS COMPETENCY, NOT METHODOLOGY

Warning! For Use Only by Experienced Ethnographers

Looking at the possible workflows above, you might notice that I focus on ethnographers doing remote usability testing, rather than usability analysts doing ethnography. I focused this way in part because of the audience, but also because of an embodied, craft-like component that I argue allows remote usability testing to approach the ethnographic. Remote usability testing is appropriate for ethnographic use only by those familiar with qualitative research praxis who therefore understand the possibilities, limitations and distortions that may arise from the use or abuse of remote usability research. Ethnography is an embodied practice—the disposition and training of the practitioner have tremendous influence over the research product (Jones 2006) in a way that is difficult to scale or industrialize (Lombardi 2009).

While certain tools and methodologies, such as on-site usability labs and analytics, are antithetical to the central, emic proposition of ethnography, remote usability testing is not necessarily antithetical. It constitutes an advanced tool for those with a depth of ethnographic praxis for refining insights and predicting behavior patterns that are relevant to design artifacts which may not yet exist in the open market. EPIC 2016 discussed the possibilities and limitations of democratizing ethnographic praxis beyond expert ethnographers. But how can one sense what one cannot see? That is the responsibility of the ethno-usability researcher, tasked with cobbling together a multi-sited ethnography in which some of the sites were remotely accessed. In the absence of a skilled ethnographer working with a known population, the limitations of remote testing increase the likelihood that the research will represent distorted understandings of users and/or their contexts. Usability testing of all sorts (proctored or un-proctored, remote or in-person) is prone to confirmation bias when conducted by unskilled researchers. Perhaps ethnography is not an external behavior we exhibit, but an aesthetic competency that we can hone and bring to bear using various methods.

What might “count” as ethnography is the ontological debate of the EPIC community. An exploration of the potential for remote usability testing to be ethnographized revolves around ethnographic expertise, rather than any particular method itself. As traditional single-site ethnography expanded to multi-sited ethnography and eventually digital ethnography, standard conceptions of the methods and fields of ethnography have shifted concomitantly. This shift has exposed ethnography as a competency rather than a methodology. Ethnography is an embodied practice, a stance toward what matters in social life, what should be noticed, what can be forgotten, and what to look for next. Democratizing ethnography exposes it as more of an art than a methodology that can be applied. In his review of The Field Study Handbook, Tom Hoy draws attention to Jan Chipchase’s remark, “Anyone can start conversation and ask questions, but it takes years of experience to become proficient in guiding but not leading an interview” (Chipchase and Phillips 2017). Hoy states that this is “a clever move: opening-up the practice to everyone, while simultaneously revealing the skill and complexity of doing it well” (Hoy 2017). This is the crux of incorporating insights from remote usability testing without compromising ethnographic quality: the craftsmanship of skilled ethnographers. How that might be defined, perhaps even as a professional certification (Ensworth 2012), continues to be a matter of debate.

The difference between ethnographic competencies applied to the same remote usability testing methodology became apparent during a recent review of a junior team member’s test annotations, essentially the usability testing equivalent of field notes. For one test, I had noted, “The participant sighs and scrolls, each swipe longer than the last. She says nothing, but I can almost feel her clutching the phone, confused either with our questions but more probably because the navigation elements do not align with her mental models… Her breathing is different from the beginning.” By contrast, the junior colleague noted, “Participant scrolls all the way down. Can’t find desired product.” The differences in seeing, selectively ignoring, listening and intuiting are the result of an ethnographer executing and evaluating a usability test. Is remote usability testing Ethnography? No. Can it be ethnographic? It depends on who is doing it.

DIRECTIONS

In this examination of cultural counter-flow, I’ve asserted that ethnographic practice could benefit from remote usability testing to answer certain questions at certain times, particularly to help minimize some of ethnography’s own drawbacks.

The convergence of ethnography and usability appears to be occurring at an accelerated rate. Take this paper’s writing as a case in point. When I submitted the abstract, the remote usability platform I most frequently use, usertesting.com, did not have the capability to recruit for moderated testing or teleconference-style sessions. In late summer, the company launched an offering called Live Conversation, which allows researchers to use the platform to schedule and recruit participants for conversations that can last as long as the researcher desires (or can afford). This development further addresses some of remote usability testing’s weaknesses. Other platforms are sure to launch copy-cat offerings soon. Live Conversation points to an ongoing tectonic collision between these two worlds. Will similar offerings change stakeholder perceptions and threaten ethnographic teams’ ability to spend as much time in the field? Will we be required to re-articulate the value of being in-place with participants?

What seems clear is that ethnography and UX are evolving, circling a center of gravity that exists outside of each discipline as it was conceived 15, or even 5, years ago. Lessons from archaeology point to greater tension and evolution between approaches that emphasize cultural products, quantifiable data and context-rich insights. As these approaches intersect, hybridize and divide, we learn more of the strengths and weaknesses of each. As we do, we have a unique opportunity to tinker as ethnographic craftsmen. I encourage us, as the purveyors of ethnographic value in industry, to play with these novel forms: a method here, a new technology there, and maybe older technologies too. Instead of considering ourselves experts in a method, we should think of ourselves as craftspeople using what is around to create hybrid forms that further the excellence of our practice. It seems to me that this hybrid, usabilified ethnography has gained a toe-hold. We, as practitioners, should work with the strengths of this usability-ethnography hybrid to amplify the power of our craft.

Christopher Golias is a mixed methods researcher within the digital technology department of a large North American retailer. He has conducted applied anthropological research across various areas including retail, healthcare, indigenous rights, substance use, mobile technology, governance, and information technology. He holds a Ph.D. in Anthropology from the University of Pennsylvania.

NOTES

Acknowledgments – Many thanks to the reviewers and curators of EPIC for their thoughtful, helpful commentaries.

REFERENCES CITED

Adams, Laura, Elizabeth Burkholder and Katie Hamilton.

2015 “Micro-Moments: Your Guide to Winning the Shift in Mobile.” Think with Google website, September 2015. Accessed September 15, 2017. https://www.thinkwithgoogle.com/marketing-resources/micro-moments/micromoments-guide-pdf-download/.

Amirebrahimi, Shaheen.

2016 The Rise of the User and the Fall of People: Ethnographic Cooptation and a New Language of Globalization. Ethnographic Praxis in Industry Conference Proceedings 2016:71-103.

Baecker, R., & Buxton, W. A. S.

1987 Readings in Human-Computer Interaction: A Multidisciplinary Approach. Los Altos: Morgan Kaufmann.

Bødker, S.

2006 ‘When Second Wave HCI Meets Third Wave Challenges’, Proceedings of The 4th Nordic conference on Human-Computer Interaction: Changing Roles.

Chipchase, Jan and Lee John Phillips

2017 The Field Study Handbook. San Francisco: The Field Institute.

Clifford, J., & Marcus, G. E. eds.

1986 Writing Culture: The Poetics and Politics of Ethnography. Berkeley: University of California Press.

David, N. & C. Kramer

2001 Ethnoarchaeology in Action. Cambridge: Cambridge University Press.

Douglas, Mary

1969 Purity and Danger: An Analysis of Conceptions of Pollution and Taboo. London: Routledge and Kegan Paul.

Ensworth, Patricia

2012 Badges, Branding and Business Growth: The ROI of an Ethnographic Praxis Professional Certification. Ethnographic Praxis in Industry Conference Proceedings 2012:263–277.

Geertz, Clifford

1973 “Thick Description: Toward an Interpretive Theory of Culture”. In The Interpretation of Cultures: Selected Essays. Edited by Clifford Geertz, 3-30. New York: Basic Books.

Goffman, Erving

1981 Forms of Talk. Philadelphia: University of Pennsylvania Press.

Hoy, Tom

2017 “Book Review: The Field Study Handbook, by Jan Chipchase.” EPIC: Advancing the Value of Ethnography in Industry website. Accessed September 15, 2017. https://www.epicpeople.org/field-study-handbook/.

https://www.usertesting.com/who. Accessed September 15, 2017

https://www.userzoom.com/. Accessed September 15, 2017

Jones, Stokes

2006 The “Inner Game” of Ethnography. Ethnographic Praxis in Industry Conference Proceedings 2006:250–259.

Kottak, Conrad

2006 Mirror for Humanity. New York: McGraw-Hill.

Leedy, Erin and Theo Downes-Le Guin

2006 A Sum Greater than the Parts: Combining Contextual Inquiry with Other Methods to Maximize Research Insights into Social Transitions. Ethnographic Praxis in Industry Conference Proceedings 2006:41–48.

Madsbjerg, Christian and Mikkel B. Rasmussen

2014 The Moment of Clarity: Using the Human Sciences to Solve Your Toughest Business Problems. Cambridge: Harvard Business Review Press.

Madsbjerg, Christian

2017 Sensemaking: The Power of the Humanities in the Age of the Algorithm. New York: Hachette Books.

Ladner, S.

2014 Practical Ethnography: A Guide to Doing Ethnography in the Private Sector. Walnut Creek: Left Coast Press.

Lombardi, Gerald

2009 The De-skilling of Ethnographic Labor: Signs of an Emerging Predicament. Ethnographic Praxis in Industry Conference Proceedings 2009:46–54.

Singer, Natasha

2014 “Intel’s Sharp-eyed Social Scientist.” New York Times website, February 15. Accessed October 1, 2017. https://www.nytimes.com/2014/02/16/technology/intels-sharp-eyed-social-scientist.html.

Willey, Gordon R., and Philip Phillips.

1958 Method and Theory in American Archaeology. Chicago: University of Chicago Press.

Wylie, A.

2002 Thinking from things: Essays in the Philosophy of Archaeology. Berkeley: University of California Press.

Usertesting.com. “2017 UX and User Research Report 2017” on Usertesting.com website, 2017. Accessed September 15, 2017. https://www.usertesting.com/resources/industry-reports