In this Catalyst, we the authors describe the benefits of ‘scaling out’: reaching out beyond one’s organization to bring in external partners to accomplish UX research. Organizations scale out their research efforts in order to cover more ground, draw from more specialties, or conduct more research more quickly than they would be able to alone. As opposed to growing an in-house team to meet research needs (‘scaling up’), scaling out can be a more inclusive approach to generating user insights, where the voices of diverse research partners throughout the world are brought together to produce powerful UX research outcomes. A case study of work with suppliers and clients illustrates scaling out. Collective intelligence pushes scaling out even further, as it counts research participants, users and potential users as part of the network of partners who get work done.

Keywords: distributed collaboration, scaling out, collective intelligence, global research

INTRODUCTION

For many years, we at EPIC had to argue for the value of ethnography, and of user research more generally, inside our organizations. Now that we have won a seat at the table, we are able to create stronger networks internally within our own organizations and externally, with partners outside. Recently there has been a push for ‘scaling up’ research teams within organizations. We have learned from this that it is no easy feat to go from a research team of two to thirteen (Clancy 2018); or to adapt the role of UX research as the team scales (Primadani 2019); or to prove the value of research inside organizations while implementing processes for working cross-functionally and managing the actual scaling process at the same time (Chokshi 2019).

In this paper we make the argument for the benefits of scaling out. We define ‘scaling out’ as reaching out beyond one’s organization to bring in external partners to accomplish UX research, to cover more ground, to bring in more specialties and ultimately to rely on collective intelligence to get work done. Scaling out is an inclusive approach that can produce meaningful results due to the presence of diverse voices, including among the UX researchers working on the project. Today, with more social networking and digital collaboration tools than ever before, scaling out has become manageable and efficient, as we describe in this Catalyst. We also consider power imbalances in UX research, particularly in the roles of client-supplier, researcher-participant and designer-user, and whether it is possible to scale out to such a point that we shift them. Can we, as UX researchers, go from evangelizing user research and amplifying the voice of the user to collaborating continually with users, with people? We call upon the EPIC community to consider what it means to be on one side of the researcher-participant dynamic and ways to shift this while working in the realities of market economies and for-profit business sectors.

We believe this conversation on scaling out is more timely than ever in a COVID-19 world. The first half of this Catalyst tackles the practical side of today’s restrictions. As the conditions which make possible in-person research are tenuous or in flux (physical space restrictions such as lockdown, curfew, masks), we must rely more than ever on distributed local researchers, participants, and digital collaboration tools. The EPIC community will already be familiar with some of these tools and their advantages.

In the second half of the Catalyst, we take the concept of ‘scaling out’ to its logical conclusion. We speculate on a form of distributed UX research where our global partners are not local professionals, but rather “citizen researchers,” or even users themselves. We imagine scenarios where user research is conducted by crowdsourcing and collective intelligence, similar to models like Wikipedia and some COVID-19 response projects (OECD.org). This is not a new phenomenon but we believe it is more timely than ever.

BACKGROUND

EPIC practitioners have written about the obstacles and opportunities of globally distributed teams and partnership through ethnography. In the early days of EPIC, Mack and Mehta (2005) observed that “as more and more corporate ethnographic work is crossing international borders, we are increasingly collaborating with teams that are spread across the globe.” Churchill and Whalen (2005) emphasize the methodological challenges of geographically distributed projects. These include the extra effort required to build and maintain relations and empathy; widely varying experiences of ethnographic methods, local language and culture; and conflicting responsibilities and lines of accountability. At times, researchers train non-researchers to help conduct research. Di Leone and Edwards (2010) point to four key needs for knowledge sharing in collaborative ethnographic research. Brannen, Moore, and Mughan (2013) describe a project in which they acted as outside experts in collaboration with a multicultural project team of nine managers within an organization. The managers were trained in ethnographic techniques, and they conducted the data collection and participated fully in analysis. The consultants acted as advisors, trainers and coaches to the project team at every stage of the ethnographic process. Kearon and Earls (2010) describe a project that employed participants to conduct ethnography themselves, in which the initial batch of results was lackluster. They urge careful framing and training in order to obtain useful results.

As remote research and collaboration tools advance, so do the conversations at EPIC. Gorkovenko et al.’s paper (2019) describes a project in which ethnographers and participants were able to engage remotely in contextual inquiry around a product with the aid of sensing technology. Golias (2017) discusses how ethnography and remote usability testing can enhance one another.

Today, as travel restrictions loom due to COVID-19, these conversations take on a new sense of urgency. Henshall (2020) describes the benefits of remote research, including participants being comfortable in their home environment, feeling safer sharing their point of view in a private space, reaching a larger pool of participants, scheduling flexibility, and including more stakeholders via remote observation. The drawbacks are that it is difficult to include unconnected households, that people less comfortable with digital tools require extra support to participate, that a private spot can be hard to find in a crowded household, and that framing is limited: the researcher cannot always zoom into details or zoom out for a broader view. Evans (2020) echoes many of these sentiments when describing her own remote ethnographic research, which relied heavily on diary study tools, online surveys, and participant-made videos. She offers solutions to overcome obstacles of remote research, including finding the least data-heavy video conferencing tools, becoming more prescriptive in questions for participant-made videos, and balancing these data with other sources of insights. In a recapitulation of an EPIC panel from May 2020, Collier Jennings and Denny (2020) paint a picture of how ethnography evolves and how research today weaves together many different vantage points. If one view is eliminated or limited (such as conducting in-person research), how can we use other available data points to provide a holistic picture? They also describe participant- and community-led engagement and co-creating insights.

SCALING OUT: A CASE STUDY IN DISTRIBUTED RESEARCH

Our Paris-based boutique agency, MindSpark, relies on scaling out our resources to accomplish projects. We offer UX and market research on a global scale to meet our clients’ research objectives. By scaling out we mean that we work with suppliers and clients to design and execute research by bringing in stakeholders and partners with specialized skillsets, expert and/or localized knowledge, as they are needed. Scaling out allows an organization broad reach and flexibility by relying on local experts globally.

For example, we, the authors, worked on a project for a large tech company for which we conducted a user experience research study in five markets: South Korea, Bangladesh, Chile, Kenya, and Thailand. The company approached us to design the research, to collect data, and to deliver findings with our local partners. Before the client came to us, it would have been difficult to anticipate a need for resources in these markets, either internally for the client or for us as a research agency. The client did not have researchers on the ground in those countries, nor the network to contract directly with local researchers. We did not have in-house researchers on the ground full-time in those markets.

What we did have to offer was an extensive, trusted network of suppliers with whom we collaborate and could quickly enlist. When conducting user research on a global product, it often becomes necessary to “pick up” partners when a research question arises in a corner of the world little known to the researcher. We first piloted the research in Korea, and the core team, including two MindSpark researchers and four clients, traveled to Seoul to observe and participate in person alongside the local team, which consisted of one moderator, one project manager, and a simultaneous translator. Then we at MindSpark, together with the clients, worked remotely with local teams to execute the other four markets concurrently. By letting go of total ownership of this project and relying on the expertise of local researchers, we were able to gain insight into how people in those markets would want to interact with our clients’ product. We were also able to save time by running the studies concurrently.

When we scale out at MindSpark, we intentionally keep the research design flexible enough to allow local partners to tailor the methodology to their localized context. In the same study, the local Kenya team recommended conducting interviews in person (pre-COVID-19) at local offices or cafés. In Chile, where internet connections are strong enough, it was deemed most appropriate to conduct remote interviews to capture a more geographically dispersed audience. The local researchers were able to provide much more cultural context than we, as outsiders, would have been able to glean on our own. Themes around public/private spaces, security/protection, sexuality and violence (all related to the product) emerged in conversations with the local researchers.

We often collaborate with researchers with special skillsets. In this project, we were able to work with moderators who had specific experience in the subject matter of the study and were able to provide additional insight and background knowledge for the final reports. In other instances, we might rely on a quant guru to validate or supplement the qualitative pieces we are working on. We also collaborate with colleagues specialized in visual design and in UI and UX design, and with creative agencies who might deliver assets that we then test through research, whether the research inspires creative production or tests it afterwards.

TOOLS OF THE TRADE

There are a few basic elements to MindSpark’s model of scaling out. First, we establish a core team, usually (at the very least) one researcher, one project manager, and one client point person who will see a project through for a certain period of time, usually from start to finish. This gives consistency and rigor to the work. Second, as mentioned above, we gather a network of partners with specialized skillsets or local knowledge. The core team acts as a hub that gathers and transmits information to that network. Third, we create extra layers of communication and documentation. This can include phone or video calls to review objectives and to introduce all the main players of the project to each other. This can mean separate project messaging channels (on Slack or WhatsApp, for example). It can take the form of shared calendars, Google Docs, and collaboration boards (on Notion, Mural, Miro, etc.). It means documenting objectives from beginning stages to end deliverables. When working remotely or in distributed teams, extra emphasis needs to be placed on careful communication in order to maintain shared goals and contribute meaningfully to the same output. Summarizing advances or changes in the project in emails or shared digital document spaces (Dropbox, Google Docs) is crucial. To this end, templates are extremely helpful. Templates help ensure logic and consistency in note-taking, findings, and final reporting.

Ongoing conversations can occur around language choice and cultural context, particularly when testing copy or content. Cross-cultural conversations can be around screener crafting and recruitment. Perhaps the target participant is not representative or might not even exist in a particular market. Legal limitations that affect a research study differ across regions. Even budgeting time per interview can be an issue. A user interview that might take 60 minutes in the US could run closer to 75 minutes in a different market. We usually require buffers of at least 30 to 45 minutes between remote interviews in markets where bandwidth is unreliable, or where people tend to share computers and other devices and might not be familiar with troubleshooting those particular devices.

BENEFITS OF SCALING OUT

A first benefit of scaling out is the ability to incorporate deep local knowledge into the research in order to produce a hybrid insider-outsider view. By partnering with local teams, the researcher gets to come in as an outsider with a naive understanding of local conditions. The local moderator has the language and cultural background to execute the study and to assist greatly with analysis and findings generation.

By partnering, the researcher can become the hub, with a higher systems-level view. This gives us the ability to cut across organizational silos, across different markets, and across different populations of users. In another project, we worked with stakeholders from three different departments across two organizations, connected by a particular user journey we were charged with mapping. By using an ethnographic approach to study the whole system and to present that system back to stakeholders, we were able to help the organizations make strategic decisions to improve their internal processes and, ultimately, the user experience. Our advice could be considered less biased, since we were not part of the organizations’ structure or their system of bonuses and promotions.

Another benefit is that it is less risky for us to try new approaches as outsiders. We can break the mold and experiment in ways that might be more difficult to do in-house or without a partner network. We have the advantage of multiple viewpoints grounded in local contexts. These viewpoints are more relevant and diverse, and simply having more brains on a project with new ideas can help challenge our preconceived ideas of how a project should run.

Scaling out also has the benefit of budget precision: executing research and hiring researchers when and where they are needed, and bringing in the necessary skillsets or expertise in a certain geography, language, or subject to answer particular questions. It has the advantage of speed, particularly when running multiple market studies concurrently. Arguably, the outcomes will be of higher quality when produced by a series of collaborators rather than a sole researcher attempting to bring all these pieces of data together alone. The quality will be enriched by the inherently diverse nature of on-the-ground voices, lived experiences, and styles of approach. In the next section, we speculate on how deep this democratization of research could go in the future.

CONSIDERING COLLECTIVE INTELLIGENCE

Story Time: Multiple Viewpoints

Imagine a particularly enterprising traveling ant trying to inspect an elephant, an animal it has never seen before. But the elephant is too big to see from such a small viewpoint. The ant may only see a hoof and think the elephant is in fact a rock, something it has seen before. But what if this ant brought more ants to crawl around, under and on top of the elephant to report back and create a 360-degree view? If it multiplies its viewpoints it can not only minimize movement but save time and spend more time observing from its chosen spot. “Oh, wow, this rock moves from time to time! Danger!” Better yet, this upstart ant could ask a local anthill for help. They know their elephants!

Figure 1. Elephant and Ant, cartoon image, 1948, commons.wikimedia.org public domain

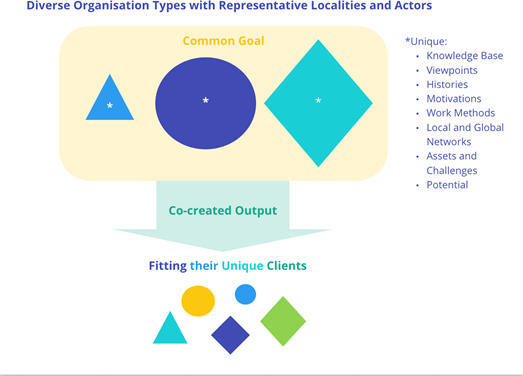

At MindSpark, we are continually pushing our projects to be more inclusive, more collaborative, and to bring clients, partners, and participants together in meaningful ways. We look to collective intelligence as one potential model for expanding the roles, responsibilities and voices that participants bring to projects. What could it look like if we narrowed the distinction between researcher and subject, and subjects became simply informant-researchers reporting from everywhere? The question is particularly relevant at this moment in time, when digital tools are widely available and travel restrictions and social distancing orders prevent researchers from going to multiple markets to conduct in-person research. Collective intelligence is a distributed rather than centralized model for advancing towards a goal (Lévy 1981). It relies on diversity of viewpoint, motivation, lived experience and knowledge, and on the self-organization and convergence of ideas of actors in an existing or newly formed network, community or subset of the population.

The quality of the collective intelligence can be enhanced by ensuring as flat a hierarchical structure as possible (including relations with partners and reduced contract leverage pressure), lean process controls that do not throttle input or research style, and encouragement of self-organization by different parties. Collective intelligence is not homogenization and delegation of directives (how and what) to multiple parties but rather empowerment, collaboration and trust in individuals’ skills and abilities to create their own how and what before it merges back to aid the common goal. If the end project design is then implemented by those in the collective intelligence project, they are more likely to fully implement and grow the resulting product (Nguyen et al. 2019).

As applied to UX research, perhaps a simple example of this is conducting research or collecting feedback about a product in the precise moment when user motivation is high. Consider, for example, the moment when a person is looking at real estate listings online and is struggling with the user interface. They are motivated to improve the interface or even to provide context around their search. Bolt and Tulathimutte (2010) describe the importance of recruiting for moments of motivation.

We can flip the intention of many usability and user tests. Rather than testing whether a designed product is desirable and usable by a target population, we can design products to be imbued with meaning, improved and challenged by the very people who have an interest in using them. We can use open-source tools to collect data and to produce insights. For example, Stamen Design, a data visualization and cartography studio in San Francisco, offers tools and visualizations that are meant to be picked up and improved by the people they are representing. One of their projects, Field Papers, which launched in 2012, “allows people to create a multi-page atlas of anywhere in the world. Once you print it, you can take it outside into the field, record notes and observations about the area you’re looking at, or use it as your own personal tour guide in a new city.”

Collective Intelligence in Action: Crowdsourcing

Figure 2. Collective intelligence as applied to UX projects in which clients, suppliers and participants work together to produce knowledge that eventually influences product-service offerings. © Sheila Suarez de Flores.

If we consider our participants to be collaborators, can we imagine scaling out our internal teams to include not only external partners and users, but also the whole world of citizens happily collaborating on our projects?

Crowdsourcing is “the act of taking a job traditionally performed by a designated agent and outsourcing it to an undefined, generally large group of people in the form of an open call” (Howe 2008). This is already happening in open initiatives such as openCovid19 and others across the globe to tackle COVID-19 related challenges. We would be remiss not to mention Wikipedia, which is arguably the most well-known example of collective intelligence (Malone, Laubacher, Dellarocas 2009). NASA is collaborating with “citizen scientists” in various projects such as landslide reporting (Cooperative Open Online Landslide Repository), comet discovery (The Sungrazer Project) and even finding a new planet or “Planet 9” (a project with over 62,000 participants).

This may all seem a bit futuristic, but in fact crowdsourcing and other open collaborations across “organizations” are quite ancient. Even trees use it! In his book The Hidden Life of Trees: What They Feel, How They Communicate (2016), Peter Wohlleben explains that trees communicate through the air, using pheromones and other scent signals. In sub-Saharan Africa, for example, the wide-crowned umbrella thorn acacia will emit a distress signal scent when being chewed upon to warn neighboring trees. These trees then change their leaf composition to become deadly to herbivores. Luckily (or unluckily for trees) herbivores, such as giraffes, have learned to eat downwind (Grant 2018).

Creating Citizen Researchers

In order to start harnessing collective intelligence, research organizations might typically examine existing organic data artifacts from the customer community, or widen their data stream to include inputs from external partners. But collective intelligence is not fully realized without a group of human actors working together toward some common goal and/or framework. To that end, we propose creating “citizen researchers”: a pool of citizens (including potentially customers) engaged and motivated to work towards finding a research result or solution for a target user.

Citizen researchers would do for UX research what citizen scientists have done for environmental science since at least the 1990s, when the term was coined, if not for millennia. Alan Irwin, a sociologist now based at the Copenhagen Business School, defined citizen science both as “science which assists the needs and concerns of citizens” and as “a form of science developed and enacted by the citizens themselves” (Irwin 2018).

A paper by Nguyen et al. (2019) on the practice of developing citizen research projects outlines these key steps to begin:

- Identify the research question and the communities of participants.

- Decide on incentives to engage participants: “a combination of both extrinsic motivators such as authorship and access to the data and intrinsic motivators such as making tasks enjoyable, offering participants the opportunity to gain new knowledge and finding meaningful outlets for their skills.”

- Determine methods to evaluate solutions created by collective intelligence and decision making.

It is important to know that to get optimum results from the above process, and despite conventional wisdom, the reflex should be to include everyone (citizen researchers and all partners) as much as possible through the entire project process including setup and synthesis. The efficiency gained is in the bespoke output unique to the diverse parties that create it and not necessarily in the reduction of interactions.

When letting diverse voices collaborate, be aware of the balance between evaluation and pure creation, so as not to filter out pertinent results or representative minorities. Once the above project foundation is complete, the team (internal/external) should still adjust it in iterations based on the feedback of the included citizen researchers. The next steps are to select and onboard a small subset of representative citizen researchers who will help complete the project structure, solidify the goal, and set up the framework or platform for input and tasks by the crowds collaborating with you. A clear ongoing communication plan is also key to onboarding everyone and keeping them engaged (Nguyen et al. 2019).

Use Cases for Citizen Researchers

As with all solutions, there is not, and should never be, a one-size-fits-all framework. Here are some use cases where ‘scaling out’ is expanded to include citizen researchers.

- To study hard-to-reach populations. For example, you want to approach an elderly population with unstable access to the internet, and who are hard to travel to. One solution is to form a team of citizen researchers (probably composed of concerned citizens or more connected relatives) to help, with permission of the target subjects, document parts of the daily lives of the target subjects.

- To create loyalty and foster innovation. For example, a business could create a platform for citizen researchers to share parts of their lives (permission required) using a product or service, with the incentive of joining a community and improving a product and/or service they care about. Within this platform, there could be a system of suggestion and upvote much like on Reddit or Threadless to collaborate on the synthesis and solution emergence mode.

- To ensure buy-in of proposed solutions. This may be the case especially for social innovation research where the aim is to save or improve lives, such as building public spaces and services (e.g. health campaigns). Not only should the citizens who will be using the solution be invited to collaborate, but so should the recognized leaders, official or not (Nguyen et al. 2019). This relates to what is called the IKEA effect, where customers are often more attached to an item and more likely to use it if they made it themselves (Norton, Mochon, Ariely 2011). Participatory design, an approach that includes a range of stakeholders (employees, customers, users) in the design process to ensure the end results meet their needs, is not new. However, again due to social media and digital tools, the co-production process can be diversified and enhanced today (Devisch, Huybrechts, De Ridder 2019).

COLLECTIVE INTELLIGENCE: AVOIDING ETHICAL TRAPS

Once you start seeing collective intelligence it is hard to stop seeing it all around you. It is everywhere in many different scales, cross-sections and flavors. The cells in our body and bacteria in our gut are even considered a form of collective intelligence (see: big brain or swarm intelligence). Bees do it! Birds do it! Computers do it (AI)! And now UX researchers can do it even more, especially due to technology. We cannot help but hypothesize that this also has the hidden benefit of promoting research best practices and critical thinking. A whole new world of researchers!

Still, there are some ethical traps to avoid. The key one is the use and abuse of our citizen researchers if they are treated as “cheaper labor”. We do not want to turn them over to the gig economy, where collaborators, often from marginalized or lower-income communities, are reliant on incentives and “fired” at whim. Gray and Suri (2019) describe the invisible labor that powers Silicon Valley, such as manual image recognition and data sorting.

We as researchers can avoid creating an unethical power imbalance by paying properly and by ensuring longevity of contract, and support at the end, when citizen researchers take this on as their full- or even part-time gig. Otherwise, monetary rewards could be avoided while leaning into other extrinsic or intrinsic rewards such as esteem and community (e.g. NASA).

Another ethical trap is any sort of research or collective intelligence analysis done on data where the respondents are not fully aware of the treatment of their data or its goal. It may be tempting to create a platform where people share about their day or other people’s with a simple terms-of-use statement that participants click through without reading. It is advisable to make the project clear from sign-up, including its goal and purpose in the main marketing and how the data will be used.

The last and most important tip is for “researchers using collective intelligence to show their results…evaluate… and be transparent about mistakes.” Rigorous evaluation of collective intelligence is necessary to provide evidence of its usefulness to stakeholders, “so that it gets recognised and funded properly” (Nguyen et al. 2019). We would add that evaluation also allows us to adjust and learn through the process, and to create loyalty and reaction from participants. Most importantly, and at the core of why we do it, it allows us to best serve our citizen researchers and target users.

WHERE DO WE GO FROM HERE?

Scaling out can be done to degrees. Scaling out can mean bringing in external consultants to execute a project. It can include distributed teams within an organization. It can be webs of networked partners that assist at various points in a project for different purposes (various geographic regions, language, expertise). It can be pushed further to include users, customers, and everyday “citizens” as creators and sources of data.

There are certainly challenges to scaling out. In our MindSpark model, we have found that challenges can arise around ownership of proprietary or internal processes. There can be procurement challenges of all these external resources and it can be less predictable or manageable how all these external sources will come to participate in one organization’s or one team’s research plan. More effort to communicate, with the aid of digital tools, is needed when working externally and across distributed groups. For those with concerns of data ownership and confidentiality agreements, this legal aspect is a bit more complicated. Not all data can be shared openly. There are privacy and confidentiality restrictions to be considered.

The future is unknown, and it would be foolish to say with any certainty how collaboration will take shape in these times of COVID-19 and, when and if it comes, a post-COVID-19 world. But what seems certain is that we should make an effort to try new forms of partnership globally and to take advantage of ones that already exist in other contexts. As many of us are working remotely, with travel restrictions, it seems logical to tap into distributed knowledge sources. This can be an opportunity to rethink our relationships to internal and external partners, and to our participants. It can be an opportunity to rethink who can even be a participant or researcher. We can imagine distributed collaboration models that include the user/participant, and shift the power imbalance of researcher-participant by giving participants a seat at the table and engendering authentic empathy through continued interaction.

Alicia Dornadic is a design anthropologist at MindSpark. She has ten years of experience in user research in the realms of work, health, social media, transportation, and public safety. alicia@mindsparklab.com

Nikki Lavoie, Founder of MindSpark Research International, is a spirited and intuitive qualitative UX researcher who translates her passion for understanding people into strategic insight. She has focused on combining ethnographic and digital techniques during her years spent in the US and now as an expat living in Paris.

Sheila Suarez de Flores is a product design and business transformation coach, passionate about ecological and social responsibility, circular design and systems thinking. She has experience in various sectors and sized businesses (local to global). She is an expert in agile, lean, distributed teams, workshop creation, facilitation, and collective intelligence. me@sdeflores.com

Elvin Tuygan is a senior design anthropologist at MindSpark with over 15 years of global strategic experience, passionate about all things digital, from gaming to social networking. She has studied, lived and worked around the world, including Boston, Rabat, London and Istanbul and has now settled in the South of France. elvin@mindsparklab.com

REFERENCES CITED

Bolt, Nate and Tony Tulathimutte. 2010. Remote Research: Real Users, Real Time, Real Research. Brooklyn: Rosenfeld Media.

Brannen, Mary Y., Fiona Moore, and Terry Mughan. 2013. “Strategic Ethnography and Reinvigorating Tesco Plc: Leveraging Inside/Out Bicultural Bridging in Multicultural Teams.” Ethnographic Praxis in Industry Conference Proceedings: 282-299.

Chokshi, Monal. 2019. “How to Succeed as a UXR Manager: Capitalizing on your Strengths as a Researcher.” Strive: The 2019 UX Research Conference.

Churchill, Elizabeth F. and Jack Whalen. 2005. “Ethnography and Process Change in Organizations: Methodological Challenges in a Cross-cultural, Bilingual, Geographically Distributed Corporate Project.” Ethnographic Praxis in Industry Conference Proceedings: 179-187.

Clancy, Charlotte. 2018. “Scaling the Deliveroo User Research Team.” Accessed September 22, 2020. https://medium.com/deliveroo-design/elevating-the-impact-of-our-research-3267068937fe

Collier Jennings, Jennifer and Rita Denny. 2020. “Where is Remote Research? Ethnographic Positioning in Shifting Spaces.” Ethnographic Praxis in Industry Conference Perspectives. Accessed September 22, 2020. https://www.epicpeople.org/where-is-remote-research-ethnographic-positioning-in-shifting-spaces/

Cooperative Open Online Landslide Repository. NASA. https://gpm.nasa.gov/landslides/

Devisch, Oswald, Liesbeth Huybrechts, and Roel De Ridder. 2019. Participatory Design Theory: Using Technology and Social Media to Foster Civic Engagement. New York: Routledge.

Di Leone, Beth and Elizabeth Edwards. 2010. “Innovation in Collaboration: Using an Internet-Based Research Tool as a New Way to Share Ethnographic Knowledge.” Ethnographic Praxis in Industry Conference Proceedings: 122-135.

Evans, Chloe. 2020. “Ethnographic Research in Remote Spaces: Overcoming Practical Obstacles and Embracing Change.” Ethnographic Praxis in Industry Conference Perspectives. Accessed September 23, 2020. https://www.epicpeople.org/ethnographic-research-in-remote-spaces-overcoming-practical-obstacles-and-embracing-change/

Golias, Christopher A. 2017. “The Ethnographer’s Spyglass: Insights and Distortions from Remote Usability Testing.” Ethnographic Praxis in Industry Conference Proceedings: 247-261.

Gorkovenko, Katerina, Dan Burnett, Dave Murray-Rust, James Thorp, and Daniel Richards. 2019. “Supporting Real-Time Contextual Inquiry through Sensor Data.” Ethnographic Praxis in Industry Conference Proceedings: 554-581.

Grant, Richard. 2018. “Do Trees Talk to Each Other?” Smithsonian Magazine. Accessed September 22, 2020. https://www.smithsonianmag.com/science-nature/the-whispering-trees-180968084/

Gray, Mary and Siddharth Suri. 2019. Ghost Work: How to Stop Silicon Valley from Building a New Global Underclass. New York: Houghton Mifflin Harcourt.

Henshall, Stuart. 2020. “Recalibrating UX Labs in the Covid-19 Era.” Ethnographic Praxis in Industry Conference Perspectives. Accessed September 23, 2020. https://www.epicpeople.org/recalibrating-ux-labs-in-the-covid-19-era/

Howe, Jeff. 2008. Crowdsourcing: Why the Power of the Crowd is Driving the Future of Business. New York: Crown Business.

Irwin, Aisling. 2018. “No PhDs Needed: How Citizen Science is Transforming Research.” Nature. Accessed September 22, 2020. https://www.nature.com/articles/d41586-018-07106-5

Just One Giant Lab. Open COVID19 Initiative. https://app.jogl.io/program/opencovid19

Kearon, John and Mark Earls. 2009. “Me-to-we Research: Digital Characters.” ESOMAR Congress 2009: Leading the Way.

Lévy, Pierre. 1981. L’intelligence collective: Pour une anthropologie du cyberspace. Paris: Éditions François Maspéro.

Mack, Alexandra and Dina Mehta. 2005. “Accelerating Collaboration with Social Tools.” Ethnographic Praxis in Industry Conference Proceedings: 146-152.

Malone, Thomas W., Robert Laubacher, and Chrysanthos Dellarocas. 2009. “Mapping the Genome of Collective Intelligence.” MIT Center for Collective Intelligence. Accessed September 22, 2020. http://www.realtechsupport.org/UB/MRIII/papers/CollectiveIntelligence/MIT_CollectiveIntelligence2009.pdf

Nguyen, Van Thu, Bridget Young, Phillippe Ravaud, Nivantha Naidoo, Mehdi Benchoufi, and Isabelle Boutron. 2019. “Overcoming Barriers to Mobilizing Collective Intelligence in Research: Qualitative Study of Researchers With Experience of Collective Intelligence.” J Med Internet Res. Vol 21. Iss. 7. 8-9. Accessed September 22, 2020. https://www.academia.edu/39881568/Overcoming_Barriers_to_Mobilizing_Collective_Intelligence_in_Research_Qualitative_Study_of_Researchers_With_Experience_of_Collective_Intelligence

Norton, Michael I., Daniel Mochon, and Dan Ariely. 2011. “Ikea Effect: When Labor Leads to Love.” Science Direct. Accessed September 22, 2020. https://www.sciencedirect.com/science/article/abs/pii/S1057740811000829

OECD Policy Responses to Coronavirus (COVID-19). 2020. “Crowdsourcing STI policy solutions to COVID-19.” Accessed September 22, 2020.

Primadani, Luky. 2019. “Strategies in adopting and scaling user research within 1.5 year.” UX Collective. Accessed September 22, 2020. https://uxdesign.cc/strategies-in-adopting-and-scaling-user-research-within-1-5-year-ad7ca28b3490

Stamen Design. Field Papers. Accessed September 23, 2020. https://stamen.com/work/field-papers/

Wohlleben, Peter. 2016. The Hidden Life of Trees: What They Feel, How They Communicate – Discoveries from a Secret World. Vancouver: Greystone Books.