Government websites and online services are often built with limited input from the people they serve. This approach limits their ability to respond to ever-changing needs and contexts. This case study describes a government digital team built from the ground up to embrace ethnographic methods as a way to make government services more resilient.

The case study begins by tracing the organisation’s origins and relationship to other research-driven parts of its government. Then it shows how the organisation’s structure evolved as more projects included ethnography. It describes various approaches to locating skilled researchers within bureaucratic confines, as well as what responsibilities researchers took on as the organisation grew. It then summarises researchers’ experiences with matrixed, functional and hybrid organisation schemes.

The case study concludes by explaining how embracing ethnographic approaches (and values) increased the resilience not only of online services but also of the organisation itself. Teams who embraced ethnography responded more deeply and thoughtfully to the pandemic and to inclusivity challenges within the organisation. The case study closes with lessons for other organisations attempting to scale an ethnographic research practice and seize its benefits for resilience.

THE EMERGENCE OF GOVERNMENT DIGITAL SERVICES

Since the early 1990s, governments around the world have invested in digitising public services with the aim of decreasing their cost and increasing their quality for citizens. Yet over this time it has become increasingly clear that digital government has not delivered all the benefits its users initially hoped for (Mergel 2017). One reason for this shortcoming is a lack of consideration of the needs and behaviours of citizens in the planning, development and delivery of public services. In response to this gap, public sector teams have increasingly looked to the toolkits of design thinking and user-centred design to place the citizen "user experience" at the forefront of public service delivery (Clarke and Craft 2017). And yet, over the past decade, the results have been mixed: some governments, such as those of the U.K., New Zealand and Singapore, have been more successful than others in delivering improved service outcomes (United Nations 2020).

In the Government of Canada, early attempts to introduce user-centred design into the public service largely took the form of embedding design generalists in policy development teams to inform the front end of policy design. At the time, designers' skill sets were seen as a toolkit for policy development rather than as a skill set primarily relevant to service delivery (McGann, Wells and Blomkamp 2021). In this role, designers led workshops, projects and interventions with the overall aim of building "empathy" among public servants for the needs of the people their policies served. While this expertise introduced fresh thinking and recommendations to increase citizen involvement in early policy planning phases, the outcomes did not go far enough to change the culture of government decision-making or to affect day-to-day, citizen-facing service delivery (Hum and Thibaudeau 2019).

This case study shows how the Canadian Digital Service (CDS), a central digital service unit created in the Government of Canada in 2017, evolved its approach to hiring researchers in response to increasing demand for an ethnographic research lens on how government understood the needs of citizens receiving its services online. It also shows why this approach proved effective in building team and organisational resilience at a time of unprecedented change, when urgent online services had to be delivered at the start of the COVID-19 global pandemic.

DESIGN THINKING AND USER-CENTRICITY IN THE GOVERNMENT OF CANADA

The creation of CDS in 2017 was a response to, and equally influenced by, a history of the Canadian public service engaging with digital services and the need for user-centred design that goes back to the early internet era.

As the 2013 Fall Report of the Office of the Auditor General on Access to Online Services ("OAG") shows, when "Government of Canada services began to be migrated online in the late 1990s and early 2000s, Canada was seen as a world leader. Leadership in customer service and efforts in providing its citizens with online offerings were two of the main reasons cited for the government's success" (Office of the Auditor General of Canada 2013). However, the same report goes on to critique the government for losing this early momentum. A later report from the OAG found that one of the major reasons for the decline in quality of Canada's digital services was the lack of importance given to the needs of the users of government services. In the words of the report, "It is critical for government departments to understand that their services need to be built around citizens, not process—or they can expect that those services will be disrupted" (Office of the Auditor General of Canada 2016).

Public servants themselves felt the truth of these words directly in 2016, with the federal government's large-scale and ongoing IT failure of the Phoenix payroll system (May 2022). Among the many lessons learned was the failure to test the new service with real users before its launch. The failure cost the government over $400 million to repay federal public servants as part of continuing compensation for damages, cost taxpayers an estimated more than one billion dollars, and significantly disrupted the pay of thousands of public servants (May 2022).

Efforts to widen exposure to the citizen experience had begun earlier. In the 2010s, traditionally siloed government public engagement teams, responsible for consulting and engaging citizens and stakeholders, began strengthening links across government by creating communities of practice. The goal was to renew their capacity for innovation and build a more flexible, knowledgeable member base. Around the same time, public servants were looking for inspiration to the growing number of public sector innovation units elsewhere, such as Denmark's MindLab, the U.K.'s NESTA and UNDP's Innovation Labs (McGann et al. 2018), and in particular to how these units embedded design talent and expertise to expand the traditional public consultation playbook.

Pursuing the promise of social innovation labs, in 2013 the government launched a government-wide initiative, Blueprint 2020, with the aim of public servants "working together with citizens, making smart use of new technologies and achieving the best possible outcomes with efficient, interconnected and nimble processes, structures and systems" by the year 2020 (Privy Council Office 2013). As a result, a series of policy innovation-focused "Hubs and Labs" were set up to grow the practice of "co-designing" policy and program solutions with citizens and stakeholders, and to document what works to support learning and replication. Outcomes and lessons were subsequently shared at an annual "Innovation Fair" held in the National Capital Region (Ottawa). Examples of government reform projects came from teams including the Privy Council Office's Central Innovation Hub (now the Impact and Innovation Unit), Health Canada's iHub, Indigenous Services Canada's Indigenous Policy and Program Innovation Hub, Immigration, Refugees and Citizenship Canada's Pier SIX (Service Insights and Experimentation), and the Canadian Coast Guard's Foresight & Innovation Hub, among others.

While the labs created momentum and awareness of the need for a more nimble style of working, the following years brought mixed results. For many labs, the scale of their effort was evidently limited to those within the lab's boundaries. This created an "us versus them" culture between people who seemed privileged to hold the title of "creative innovator" and those who continued to represent an outdated style of working. Another critique concerned the placement of labs as separate entities within an organisation: removed from the day-to-day pressures facing the organisation's core functions, they were also removed from the realities of what it takes to create lasting change (Hum and Thibaudeau 2019). Perhaps the most glaring limitation was the gap between labs' knowledge of, and involvement in, early-stage policy making and the practical implementation of policy in people's lived experience. Because labs were semi-autonomous entities running short bursts of co-design projects, with consultations sprinkled at the front end of policy making, the result was an imbalance in how accountability, applicability and implementation were planned for (Barnes 2016).

All the while, the need to increase citizen satisfaction and demonstrate measurable outcomes meant that comparable governments were trying to catch up to rising expectations of what "digital transformation" could bring to the public sector. The U.K.'s Government Digital Service showed results in creating efficiencies and meeting client needs (Greenway et al. 2018). It was closely followed by the Obama Administration's U.S. Digital Service, Australia's Digital Transformation Agency and, at the provincial level, Canada's own Ontario Digital Service, each set up to lead the strategic implementation of its government's digital agenda (Canadian Digital Service 2017). Collectively, these efforts signalled that the time was right to start exploring what a Canadian approach to digital government could look like.

GROWING THE RESEARCH PRACTICE AT THE CANADIAN DIGITAL SERVICE

The Canadian Digital Service (CDS) was created in 2017 within the central federal department of the Treasury Board of Canada Secretariat to "demonstrate the art of the possible" and build digital capacity in federal departments (Elvas 2017). Initially founded as a three-year pilot, the team received additional funding in 2019 to deliver government enterprise platform services and to continue partnering with departmental teams to increase digital skills and capabilities. In 2020, as its focus shifted to supporting the federal pandemic response, CDS's budget was doubled, and in 2021 the organisation was established as a permanent federal program (Budget Implementation Act 2021) to scale its impact and reach.

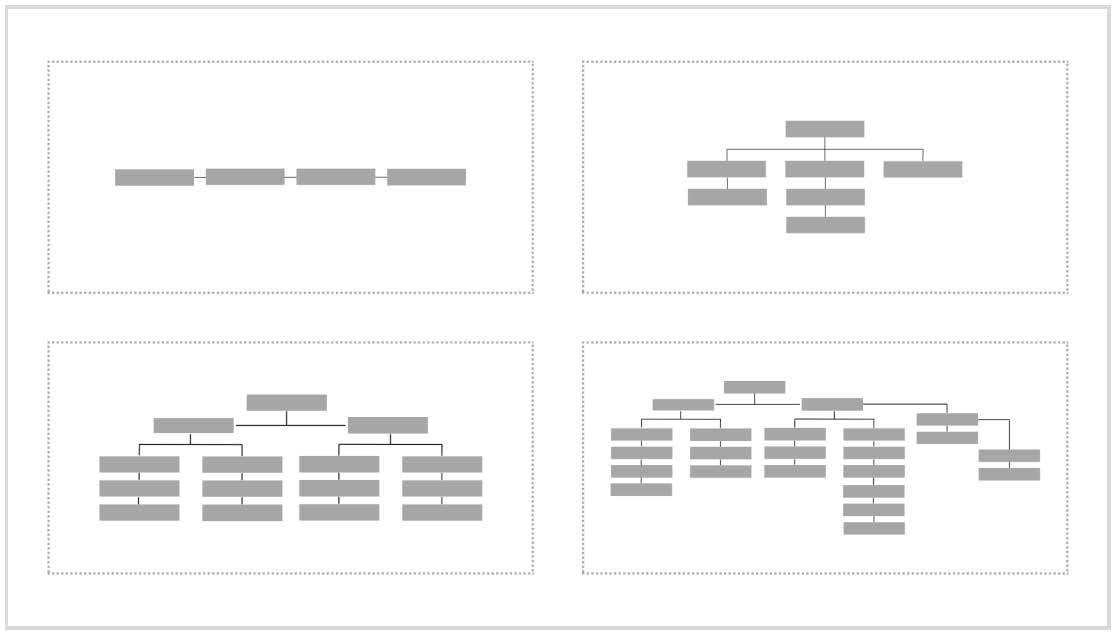

Over its first five years, CDS's approach to embedding research and ethnography evolved alongside its growth. This process can be broken down into five phases, each with its own definition, goals and challenges.

The first phase can be characterised as a "team of one." CDS hired its first researcher, one of this paper's authors, who for over six months remained its only researcher, to help the team learn how to build services around user needs rather than government's. This early phase was lean and scrappy. As in any team of one, the researcher performed several roles: planner, designer, researcher and advocate. The mission was singular: to begin shifting data and insight generation from a traditional top-down framework to a more ethnographic style, surfacing a bottom-up layer of evidence based on people's experience of government.

The second phase can be described as promotional. Building on the success of early research engagements with the Department of Veterans Affairs (Ferguson 2018), Immigration, Refugees and Citizenship Canada (Lorimer and Naik 2018) and others, the organisation emphasised the value of working in the open and hosted several research-focused meetups and workshops for public service teams. This phase also saw increased hiring of designers and front-end developers, as well as a second researcher, who together formed a user experience team. The challenge now was balancing partners' growing interest in unpacking bigger research questions with the team's limited research capacity.

The third phase was defined by growth. There was a steady flow of requests to conduct ethnographic research on people's complex relationships with government services, including with members of the armed forces, Veterans, newcomers to Canada, low-income taxpayers and disability benefit applicants (Canadian Digital Service 2019). This momentum showed the need for greater craft-based guidance and leadership, and the first research manager, one of this paper's authors, was hired to establish the research team. By this stage, other disciplines such as design and development had also grown, necessitating an expanded organisational structure. A matrix-style framework was developed, under which the research team reported to the head of product delivery and individual researchers reported to multiple leaders.

The fourth phase saw the research practice mature in its frequency, breadth and operations. Research was now built into every product phase and into the key decision points of product development (Lee 2020). Participant recruitment emphasised diversity in language, literacy, access to technology and disability. Research with end users was critical, alongside research with the public servants administering the service. Shareable artefacts, method toolkits and templates were prioritised to educate and guide people through the process. It was not entirely surprising, then, that as research skills grew, enthusiasm for the fast-paced agile process waned. Researchers were tired of feeling limited to shipping usability findings when the data pointed to deeper structural concerns in service design.

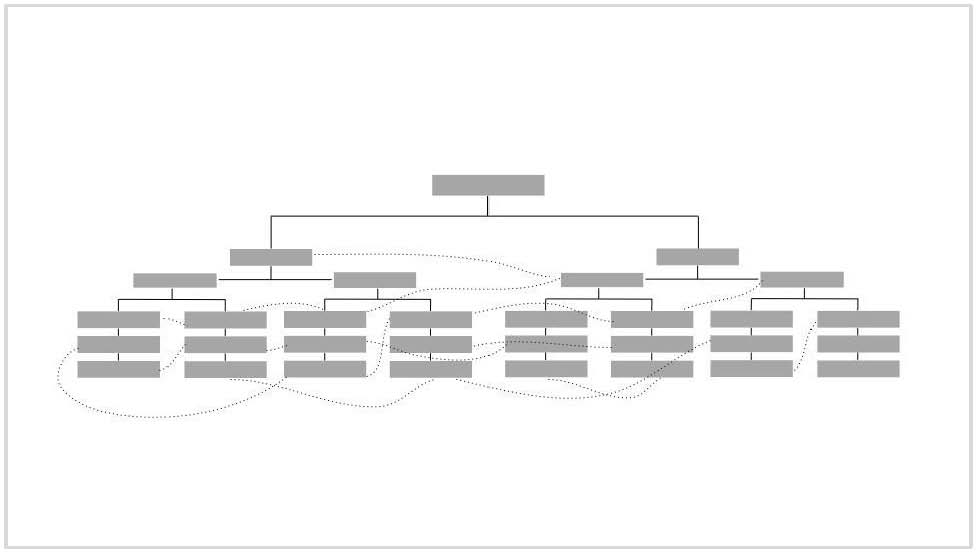

Finally, the fifth and current phase represents research's integration into various functions across the organisation. Having scaled to over a hundred staff, and in an effort to improve efficiency, the organisation shifted from a matrix to a divisional structure. One division delivers enterprise platform components for federal teams to adapt and reuse; there, researchers are embedded in product teams. The other division is a consultancy providing bespoke guidance and coaching to federal teams; there, researchers act as consulting strategists. In both divisions, researchers shifted from reporting to a research manager to reporting, most likely, to a non-research manager. Researchers were also hired in new places. For example, the platform division's client experience team brought in researchers to consider the end-to-end client journey, and in the consulting division, researchers' skill sets were sought across teams.

Transitioning to this new mode of management was not trivial. CDS team members had built up substantial identities around their discipline-specific communities. Senior managers also had to give up managing only people in their own discipline and change the scope of their leadership, often stretching their professional skills. Importantly, the research team did not make this switch alone: engineers, designers and product managers all saw the end of their discipline-specific groupings and gained new managers. Research "diffused" into the organisation as part of a broader effort that diffused many other job types.

Today's challenge is an obvious one: how will researchers embedded in various parts of the organisation maintain a sense of community and connection, and tell a shared story? Is it relevant to do so as one group? Or is it more impactful for them to leave research crumbs across disciplines and areas of the organisation? The next phases will tell.

Figure 1. Diagram by Mithula Naik, 2022. Continuous organisational shape-shifting since 2017 — from “family-style”, to “matrix” to “business units”.

Figure 2. Diagram by Mithula Naik, 2022. In the fifth and current organisational structure, research is integrated into various functions, enabling trails of connection across the organisation.

TENSIONS WITH THE GROWTH AND ADAPTATION OF ETHNOGRAPHIC METHODS AT CDS

As CDS' ethnography practice grew and diffused, it created interesting organisational tensions. Researchers found themselves in conflict with developers, designers and leaders. Over time, these conflicts fell along predictable lines and exemplified elements of cultural and epistemological theory from the ethnographic world. The following section details three types of conflict that were common as CDS grew. It provides examples of each conflict, explains them through theory, and offers some perspective on their "usefulness" to the organisation's growth.

Conflict 1: Emic vs. etic perspectives

"Why can't we just call this person what he is: a user?" one developer exclaimed to one of this paper's authors. On one hand, researchers often advocated describing people and their activities in those people's own language. On the other hand, developers and product managers tried to apply their own categories to the people at hand. The language of "customers," "users" and "stakeholders" was non-specific, but common in their professional communities. This felt like a conflict between emic and etic perspectives: using the language and categories of the people being studied versus those of the observer (Alasuutari 1995). Stepping back from one's own language proved harder for some than for others.

With time, the authors of this case study came to see the "word wars" as emblematic of a deeper conflict, one about the perspective taken when trying to describe a group of people. Many CDS team members made sense of their field by developing their own taxonomies and applying them to the subject at hand. In a given situation, they searched for "users" and "providers," "transmitters" and "receivers." Although research interviews challenged them to better understand these people's behaviour, it was harder for them to escape the categorisation schemes through which they viewed people. These schemes helped bring order to complicated situations and, to some degree, a bit of comfort in a turbulent environment.

Yet, over time, more and more CDS staff embraced the language of the people their work was serving. With constant campaigning and reminders, the dreaded "user" fell out of favour, replaced by words more specific to the digital product at hand. Software developers, in particular, seemed to realise that their expertise did not hinge on calling people certain words. Rather, those broad categories often obscured the nuances of the people behind the keyboard. Perhaps this indicated that non-researchers were, in some basic ways, already practised at taking on the language of others. Indeed, this tension seemed to be a "growing pain" of introducing ethnographic methods to an organisation used to other methods. But as this tension eased, others seemed to take its place.

Conflict 2: The time orientation of leaders and researchers

What matters more: a grounded understanding of a project's past, the needs of the present, or a vision for the future? These questions of "time orientation" (Seeley et al. 2012) are key elements of culture, but they also became key fault lines for project teams. As the organisation grew, many CDS teams became embroiled in internal debate about which of these questions to focus on. Interaction designers tended to focus on sketching visions of the future, while product managers focused on the current state of the project and its tasks. When teams had dedicated researchers, those researchers often became the team historian, tracing the journey of the team's thinking (and its relationship with users).

Far from being simple prioritisation decisions, these conflicts ran deep and caused substantial tension on teams. People often felt that their time orientation was "right" and forcefully advocated for it above others'. Sometimes these debates became matters of professional and personal integrity, as time orientation became a marker of identity and culture.

Arguably, these tensions still exist at CDS. But as individual roles (like "researcher" or "product manager") faded from prominence, so did these tensions. When people did not identify as a "researcher," they did not seem to feel as committed to maintaining that group's identity by advocating a particular time orientation. Debates about time orientation (and other cultural dimensions) seemed more prominent when the organisational structure created specialisations. Unlike the "word wars," this tension seemed to be shaped by organisational choices that managers championed.

Conflict 3: The epistemological assumptions of developers vs. ethnographers

Underneath both the "word wars" and the cultural conflicts was a deeper schism in assumptions. These conflicts bubbled up in questions like "How can you trust research based on only 5 users?" or statements like "These are opinions, but how about the facts." Although these views often came from the organisation's external partners (who were new to the practice), they also came from the "inside": staff members who were sometimes sceptical of researchers' activities.

At the root, many software developers in the organisation were positivists. That is, they believed that with the right measurement tools (website analytics, experiments, software), CDS could discover a singular truth about what users needed. As one developer once told one of the authors, “I want to build a simulation which will show us the single right way to design this website for all of the people.”

On the other hand, the growing group of ethnographic researchers had a more complicated relationship with “the truth.” They advocated uncovering layers of details and additional complications, instead of simplifying a group into a single statement of their needs. Although some researchers would describe their work as trying to show “reality,” very few of them would use phrases like “find the single truth.”

Although the authors did not describe it this way at the time, these conflicts are ultimately epistemological ones: core disagreements about valid ways to produce knowledge. Ladner (2016) describes such conflicts in her guide to doing ethnography in the private sector: ethnographic practitioners sometimes come into contact with more "factist" colleagues who struggle to make sense of this different approach to research.

But beyond spawning squabbles, what did these ethnography-induced tensions do to CDS as a group? The three tensions could be summarised as "the culture of professional ethnography" meeting "the culture of progressive software development." One focused on using pre-existing categories, the other on finding new ones. One focused on questions of the past, the other on the future. One was interpretivist, the other positivist.

Like members of any two cultures coming into contact, CDS staff had a variety of reactions. Some seemed to further retreat into their ways of being and knowing, displaying less interest in others as time went on. Others became boundary actors, adept at speaking the language (and explaining the methods) of people on different sides. They engaged in a kind of “code switching” that enabled them to work across these boundaries.

Interestingly, people who were adept at bridging researcher and developer cultures were also good at opening and navigating other identity-related discussions. When the murder of George Floyd opened discussions about intersectional oppression in Canada (as well as the U.S.), researchers and their advocates were active participants. They seemed able to consider different ways of seeing and knowing. Perhaps this generalisation of ethnographic ways of seeing and knowing (at least among some staff) is even more valuable than using an ethnographic toolkit to improve software.

THE FUTURE OF CDS AND ETHNOGRAPHY WITHIN IT

Despite (or perhaps with the help of) the tensions of ethnography, Canada's digital service team continues to become further institutionalised. CDS recently received additional funding, as well as permission to hire permanent staff. Several CDS products have been widely adopted at scale. The organisation's role within its home department also seems increasingly stable: new top-level executives have come and gone, and CDS remains. And although CDS retains several original team members, many of its key staff have also come and gone, creating turnover across all teams, including researchers.

CDS retains a core group of researchers with an ethnographic bent. Although they are now scattered around the organisation (in the divisional model described above), they retain influence over its products. Divisional leaders have chosen to hire researchers, even when they are not researchers themselves. CDS, and its commitment to ethnographic methods, seem here to stay.

IN CONCLUSION: LESSONS LEARNED FOR OTHER ORGANISATIONS

What are the lessons learned for others attempting to implement ethnography throughout an organisation? Although the particulars of Canadian government, public interest technology and CDS’ particular staff make it difficult to generalise, the authors note:

Organisations rolling out ethnographic methods broadly should prepare for deeper conversations about epistemology. Our experience suggests that rolling out procedures and approaches alone does not yield the impact organisations hope for. The philosophical basis of ethnography matters, and CDS might have been better served by deliberately introducing it to the organisation.

Organisations hiring teams of ethnographers (or ethnographically influenced researchers) should actively prepare for the culture they will bring. Professions are not simply sets of practices; they are whole cultures, with their own rituals. Leaders trying to bring these people into an organisation should expect disagreements not only about methods but also about basic vocabulary and cultural orientation.

Organisations hiring ethnographers should also attempt to set their expectations appropriately. One of the beauties of ethnographic methods is their tendency to help people zoom out and grasp many different nuances of a problem. But within a government service (and likely other bureaucratic organisations), even if you grasp all the elements of the problem, you may only be able to fix one or two. As ethnographic views collided with government realities, CDS management could have adjusted its expectations downward. You can change government to serve people better, but you cannot change all of government, to serve all the people, all at once.

Most importantly, introducing ethnographic methods at CDS helped both researchers and non-researchers think more flexibly. Exploring different ways of speaking, and different ways of knowing, enabled the team to produce impactful services. It also enabled the team to adapt to changing conversations and world conditions. In other words, the tensions were not only worth it—they were part of what made the change worthwhile.

What organisational model works best? What would we recommend to other organisations? In true ethnographic fashion, we do not conclude with an obvious recommendation for others embarking on a similar journey. We have shown the many organisation-specific factors that drove CDS' evolution. Instead of making a blanket recommendation, we suggest that other researchers examine the details of their own organisations and ask themselves: what problem can a new structure solve? And how?

Colin MacArthur is an adjunct professor in the Department of Management and Technology at Università Bocconi in Milan, Italy. He was previously the Director of Digital Practice and Head of Design Research at the Canadian Digital Service. He has also worked as a designer and researcher at the Center for Civic Design and at 18F, the U.S. federal government's design consultancy.

Mithula Naik is the Head of Platform Client Experience and Growth at the Canadian Digital Service (CDS), a central digital services unit in the Government of Canada focused on delivering simple, easy to use services for all Canadians. In her role, Mithula works closely with government departments and agencies in building public facing platform services that uplift the needs of users and, as a consequence, improve the quality of public services and people’s experience of government. Prior to CDS, Mithula ran design-led interventions to improve policy, program and service delivery at the Privy Council Office’s Impact and Innovation Unit. Mithula’s career spans India and Canada, where she has worked with startups and household technology brands such as Nokia, Xerox and Hewlett-Packard in shifting towards human-centred product development to enable broader impact for the betterment of society.

REFERENCES CITED

Alasuutari, Pertti. 1995. Researching culture: Qualitative method and cultural studies. Sage.

Barnes, Jeff. 2016. The Rise of the Innovation Lab in the Public Sector. Marine Institute of Memorial University of Newfoundland.

Canadian Digital Service. 2017. Beginning the Conversation: A Made-in-Canada Approach to Digital Government. Accessed August 1 2022. https://digital.canada.ca/how-cds-started/full-report/

Clarke, Amanda, and Jonathan Craft. 2017. The twin faces of public sector design. Governance (Wiley). DOI: 10.1111/gove.12342. Accessed August 14 2022.

Elvas, Pascale. 2017. Why Canada needs a digital service. Accessed August 1 2022. https://digital.canada.ca/2017/08/03/why-canada-needs-a-digital-service/

Ferguson, Ross. 2018. Teaming up to deliver better outcomes for Veterans. Accessed August 1 2022. https://digital.canada.ca/2018/07/24/teaming-up-to-deliver-better-outcomes-for-veterans/

Greenway, Andrew, Ben Terrett, Mike Bracken, and Tom Loosemore. 2018. Digital Transformation at Scale: Why the Strategy Is Delivery. London Publishing Partnership (Perspectives).

Hum, Ryan, and Paul Thibaudeau. 2019. Taking the Culture out of the Lab and Into the Office: A "Non-Lab" Approach to Public Service Transformation. Insider Knowledge, DRS Learn X Design Conference. https://doi.org/10.21606/learnxdesign.2019.09011

Ladner, Sam. 2016. Practical ethnography: A guide to doing ethnography in the private sector. Routledge.

Lee, Adrianne. 2020. Listening to Users: Improving the COVID-19 Benefits Finder Tool. Accessed August 1 2022. https://digital.canada.ca/2020/07/06/improving-our-covid-19-benefits-finder-tool-using-a-feedback-text-box/

Lorimer, Hillary and Naik, Mithula. 2018. Reschedule a citizenship appointment: Empowering applicants and frontline staff. Accessed August 1 2022. https://digital.canada.ca/2018/04/13/reschedule-a-citizenship-appointment/?wbdisable=true

May, Katheryn. 2022. 'Can't be fixed': Public service pay issues persist, six years after Phoenix was launched. Ottawa Citizen website. Accessed August 1 2022. https://ottawacitizen.com/news/local-news/cant-be-fixed-public-service-pay-issues-persist-six-years-after-phoenix-was-launched

McGann, Michael, Emma Blomkamp, and Jenny M. Lewis. 2018. The rise of public sector innovation labs: experiments in design thinking for policy. Policy Sciences, Springer; Society of Policy Sciences, vol. 51(3), pages 249-267.

McGann, Michael, Tamas Wells, and Emma Blomkamp. 2021. Innovation labs and co-production in public problem solving. Public Management Review, Taylor & Francis Journals, vol. 23(2), pages 297-316.

Mergel, Ines. 2017. Digital Service Teams: Challenges and Recommendations for Government. IBM Center for The Business of Government. Accessed August 14 2022. https://businessofgovernment.org/sites/default/files/Digital%20Service%20Teams%20-%20Challenges%20and%20Recommendations%20for%20Government.pdf

Office of the Auditor General of Canada. 2013. Report of the Auditor General of Canada, Chapter 2: Access to Online Services. Accessed August 14 2022. https://publications.gc.ca/collections/collection_2013/bvg-oag/FA1-2013-2-2-eng.pdf

Office of the Auditor General of Canada. 2016. Message from the Auditor General of Canada. Accessed August 14 2022. https://www.oag-bvg.gc.ca/internet/English/parl_oag_201611_00_e_41829.html

Privy Council Office. 2013. Blueprint 2020. Government of Canada website. Accessed August 1 2022. https://www.canada.ca/content/dam/pco-bcp/documents/pdfs/bp2020-eng.pdf

Seeley, J. R., R. A. Sim, and E. W. Loosley. 2012. "Crestwood Heights: A Canadian Middle Class Suburb." Sociology of Community: A Collection of Readings 5: 218.

United Nations, Department of Economic and Social Affairs. 2020. United Nations E-Government Survey 2020. publicadministration.un.org. Accessed August 14 2022. https://publicadministration.un.org/egovkb/Portals/egovkb/Documents/un/2020-Survey/2020%20UN%20E-Government%20Survey%20(Full%20Report).pdf