This case study by a pan-African UX research and design agency offers key insights for companies attempting to...

New deployments of foundational AI models in digital products have expanded public engagement with synthetic...

This paper asks what we owe to our teams and our informants when we engage in research with and about people....

Mandatory reporting laws require the reporting to a designated government agency of a known or suspected case of...

This paper introduces a theoretical and interpretive tool, the Process of Argumentative Aphasia, for...

There are myths and misconceptions around the objectivity of quantitative research and the neutrality of technology, and the two are linked. At best they lead organizations to embrace half-truths; at worst they result in discrimination. By embracing our humanity and using our own subjectivity to critically examine the ways we research, we can prioritize our work in a way that aligns with ethical values and brings humans to the center.

Ethnographers are not time travelers, but we may be close. Our frameworks and methodologies develop a nuanced understanding of how relationships, processes, and objects evolve over time. This 'temporal expertise' is key to enacting our ethical responsibility to the past and future, says...

Learn models and principles to ensure organizations are creating, using, and deploying AI that coworkers, customers, and society can trust. Our lives are directed, enriched, influenced, and sometimes...

To do ethical, equitable work in any domain, we need robust tools for assessing and addressing power. Whether we're creating products, services, or policies, inequities can create direct and indirect...

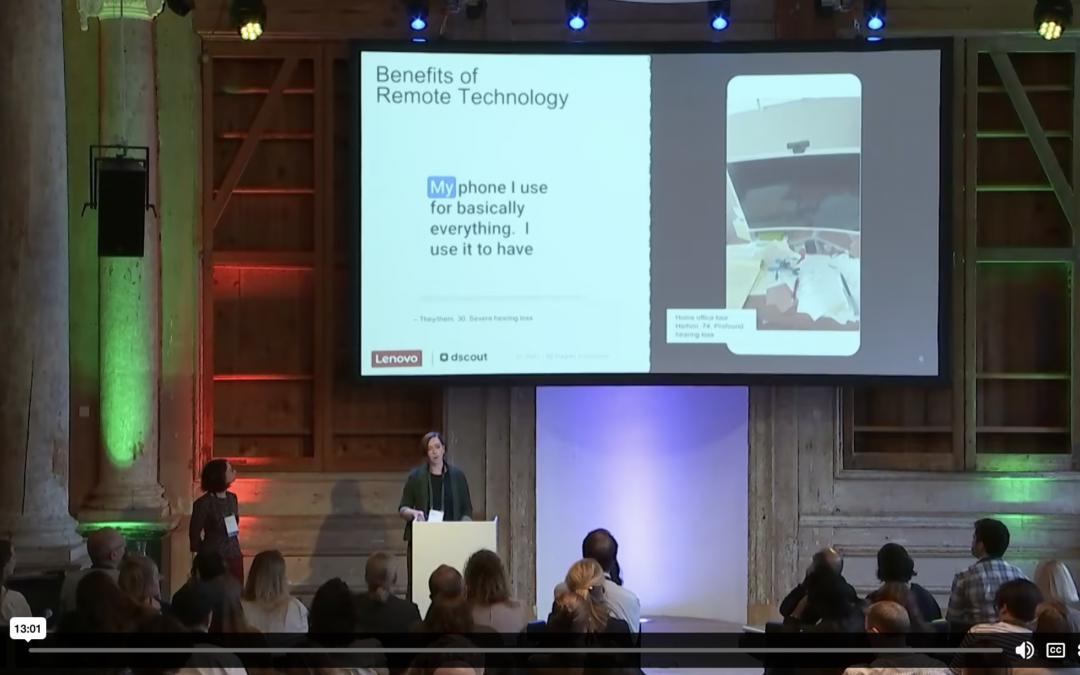

This case study examines how researchers at Lenovo and dscout partnered to conduct a mobile ethnographic study on the technology experiences of individuals who are d/Deaf and hard of hearing, with the goal of...