This case study examines how researchers at Lenovo and dscout partnered to conduct a mobile ethnographic study on the technology experiences of individuals who are d/Deaf and hard of hearing, with the goal of making their products and research practices more accessible and inclusive. The study revealed common frustrations and pain points people experience when using their everyday technology. The researchers also learned valuable research design and operations lessons related to recruiting participants who are d/Deaf and hard of hearing, providing accommodations, and establishing an accessible research environment. This case explores the benefits of mobile-forward research design, and the additional considerations and adaptations necessary for collecting both asynchronous and synchronous data from individuals who have hearing loss and who have different communication modes and preferences, including American Sign Language. The authors discuss how more inclusive research informs product design, which can make Lenovo and dscout products more accessible for everyone, regardless of ability.

INTRODUCTION

In this case study, we share the story of a research partnership between two businesses – global technology company Lenovo and the online research platform dscout – that joined forces to study the unique technology experiences and obstacles of individuals with hearing loss. In our efforts to explore the lived experiences of our participants, we were challenged to interrogate and adapt our research design and ethnographic practices to be more ethical and inclusive. Design equity for these organizations has been, and continues to be, an important factor to demonstrate ethical and responsible corporate citizenship in the areas of diversity, equity, and inclusion. This case study is a proof-of-concept that research can contribute meaningfully – and is in fact integral – to these efforts and adds to the business case for more generative ethnographic studies in organizations of all shapes and sizes.

Lenovo: Smarter Technology for All

When invoked in many business settings, the terms diversity, equity, and inclusion (DEI) commonly focus on matters related to human resources, an organization’s workforce, and the development of an inclusive organizational culture. This is especially true at Lenovo, a Fortune Global 500 company that has built on its success as the world’s leading PC player by expanding into new growth areas of infrastructure, mobile, solutions, and services. In 2005 Lenovo acquired IBM’s PC division, which created one of the most diverse and multicultural businesses of that time, and leaders worked diligently to develop one inclusive corporate culture (Qiao and Conyers 2014). Since then, the role of DEI has developed into brand purpose for Lenovo, with its vision of leading and enabling “Smarter Technology for All” to create a better world. To support this vision, leaders established the company’s Product Diversity Office (PDO) in 2020, which has been the authority on embedding DEI into the processes of product design and development. Through the Diversity by Design review process, products are validated by inclusive design experts to ensure usability for a diverse customer base, and to minimize any inherent bias. This systematic approach creates opportunities for our researchers and designers to think about the critical perspectives of users who might be missed when products are considered. To verify that our products work for everyone, regardless of abilities or physical attributes, Lenovo’s goal is to have at least 75% of our products through this review process by 2025 (Lenovo Group Limited 2021).

To support these DEI efforts, in 2021 researchers on Lenovo’s User Experience Design team began conducting research initiatives with users with disabilities to better understand their everyday experiences with the technologies they rely on, and the challenges they face with those technologies. The first was a generative study conducted on the technology use of people with visual impairments. This study would have typically been conducted in-person and in the field to best capture how they used their tech for work and learning. However, due to pandemic-era safety concerns and restrictions, this was not possible. We needed a solution that would allow us to safely engage with users and capture data from their natural environments, and the mobile ethnography app dscout provided this. In the post-project debrief, the lead researcher passed along valuable feedback to the dscout development team regarding accessibility pain points blind users experienced using the dscout app. In turn, dscout responded with an eagerness to make adjustments to their platform and followed up with our research team to learn more about our own experiences doing research with members of the disability community.

Dscout: Pursuing Platform Improvements

Dscout is an end-to-end mobile ethnography platform that connects researchers to real people in their real contexts via unmoderated asynchronous qualitative questionnaires and longitudinal diary studies. Over the years, dscout has also run various studies with its own participant pool on how to improve the user experience of its app and make it more accessible and inclusive. A study run with gender non-conforming participants informed an overhaul of how the platform collects and stores gender data, and a companion study run with participants of color prompted the team to change the wording and storage of race and ethnicity data. Dscout now seeks to expand its understanding of its user base by learning about participants with varying accessibility needs, in hopes of moving toward a platform that is inclusive and usable for all.

An Accessibility Research Partnership

Due to our organizations’ mutual commitment to creating better experiences for users, passion for inclusive research, and the desire to learn more from members of the disability community, we decided to collaborate on a new accessibility project. We turned our attention to another often-overlooked segment of people – individuals who are d/Deaf1 and hard of hearing (DHH). Neither company had previously conducted studies with users with hearing loss, so there was much to learn. And given the prevalence of disability related to hearing loss, focusing on this community is indeed a worthwhile effort. According to the World Health Organization, 430 million people in the world need rehabilitation for their hearing disability, and 25% of those over the age of 60 are impacted by disabling hearing loss (World Health Organization 2021). We developed a two-phased study design modeled after Lenovo’s study with individuals with visual impairments, which started with a mobile diary study and was followed by in-depth interviews.

RESEARCH GOALS

The goals for this research initiative were multi-fold. Both organizations recognize that more inclusive research informs product design, which can make products more accessible for all users, regardless of ability. So a key goal was to gain insights on how to make our products more accessible for individuals who have hearing loss, which in turn could benefit all users. This is commonly referred to as the “curb cut effect,” where disability features benefit far more people than those for whom they were initially designed (Blackwell 2017). For example, curb cuts in sidewalks were originally designed for wheelchair users but are also used by individuals pushing baby strollers or delivery workers using a dolly to move heavy boxes. As researchers, we also acknowledged from the start that we didn’t know what we didn’t know about conducting research with the DHH community. As such, another key goal was to adapt our research design and practice to be inclusive and equitable, taking into consideration the different contexts and needs of our participants. These goals were driven by a broader goal of learning more about the lived experiences of individuals who are d/Deaf and hard of hearing as they use their technology for work, learning, and day-to-day tasks.

To accomplish these goals, we devised four research questions to guide our study:

- What kind of tech setups do individuals who are DHH utilize in their everyday lives?

- What are the challenges inherent in using technology as someone with a hearing loss?

- What design features assist in using technology for the DHH community, and what design features create barriers to use?

- What design advice do users from the DHH community have for designers at tech companies?

RESEARCH METHODS

Participants

To be eligible for the study, participants had to be 18 years or older, identify as having a hearing-related disability, and use digital technology regularly for their work, learning and/or personal tasks. In order to recruit users who had varying degrees and forms of hearing loss, applicants were asked to identify their type of hearing loss (e.g., sensorineural, conductive, auditory processing, or mixed), the age they started experiencing hearing loss, and the kinds of assistive-hearing tools they used.

After screening over 5,000 applicants through both dscout’s participant panel and a third-party recruiter, we ended up with 23 participants, or “scouts,” who qualified for and completed the study (a total of 36 were invited). These participants were selected to represent a wide spectrum of hearing loss, as well as the varying types of assistive hearing devices they used. Along gender distribution lines, 13 identified as female, 8 identified as male, and 2 identified as nonbinary; their ages ranged from 21 to 70 years old. The majority were employed either full or part time, with two noting full-time status as college students. A plurality of the sample self-identified as having “moderate” hearing loss (Table 1), and more than half reported experiencing their hearing loss from birth and/or before age 18 (Table 2). No users who completed the study reported the onset of their hearing loss after the age of 44, even though seven participants were between the ages of 45 and 70. Among those who used assistive-hearing devices, 14 used hearing aids and four had cochlear implants.

Table 1. Degree of hearing loss2

| Degree of hearing loss | Number of participants |
|---|---|
| Moderate | 11 |
| Severe | 7 |
| Profound | 5 |

Table 2. Onset of hearing loss

| Age range | Number of participants |
|---|---|
| At birth | 10 |
| After birth – age 17 | 7 |
| Between ages 18-29 | 4 |
| Between ages 30-44 | 2 |

Design

The study was carried out in two sequential stages. First, we conducted a mobile diary study with our full sample of 23 participants. After analyzing this initial data, we invited a subset of those users to participate in hour-long interviews that probed their diary study responses in more depth. We lay out our methods, and their rationale, in detail below.

Diary Study (sort of)

The first stage of our project consisted of a mobile unmoderated study using the dscout Diary tool. We use the term “diary” as a shorthand for our method, but it might be better described as a media-rich contextual survey. The study at hand consisted of five disparate qualitative research activities (called “Parts”), which participants filled out via their mobile phone at their own pace over the course of 2 weeks. These Parts were, in order:

- Background and consent: Telling scouts more about the mission and asking various questions about how they prefer their data to be used.

- Your Tech Space: Participants tell us about the space where they use technology frequently.

- Your Devices: Participants show us all the devices they use on a daily basis.

- Great Design, Bad Design: Scouts tell us more about the highs and lows of the technology they use.

- Challenges and Final Thoughts: We ask about challenges scouts face as someone who’s d/Deaf or hard of hearing and ask them their final thoughts to close out the study.

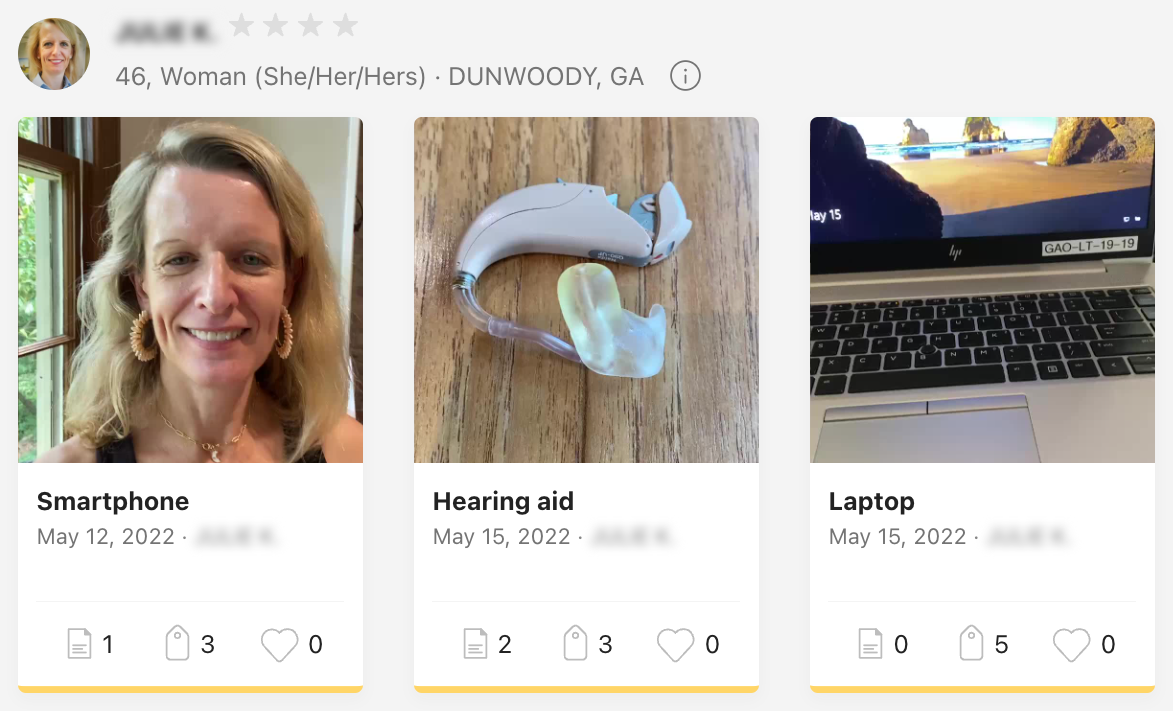

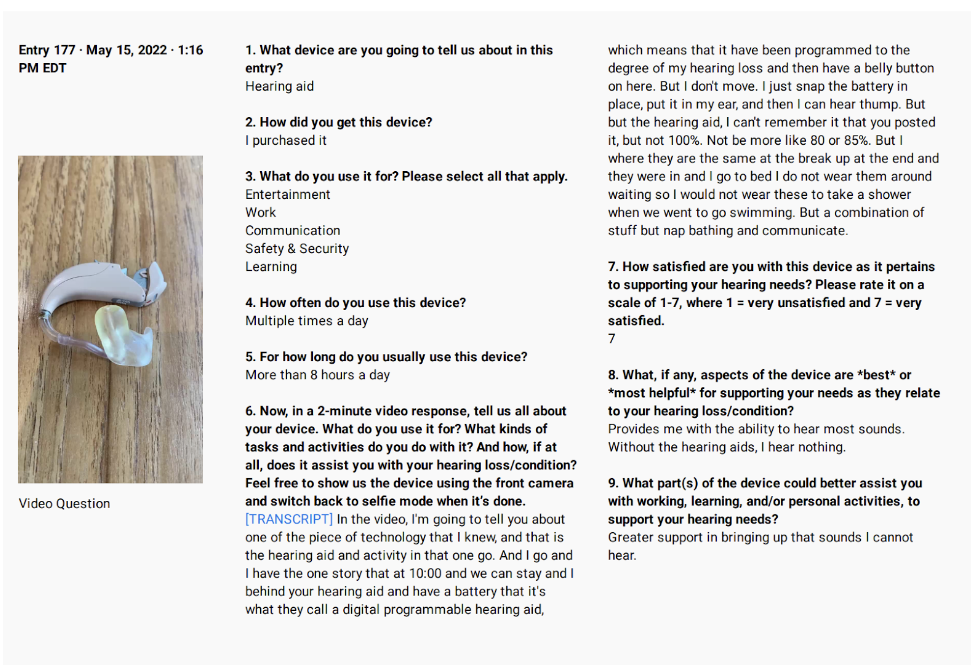

Dscout’s platform allows participants to complete activities more than once and submit multiple “entries,” so they could detail as many tools as they had and as many design examples as they wanted to talk about (Figure 1). In Parts 3 (“Your Devices”) and 4 (“Great Design, Bad Design”), participants submitted multiple entries going into great detail about individual devices and design elements (Figure 2). We were careful in these Parts not to define too closely what we meant by “tool” or “design.” Avoiding a close definition allowed us to scaffold participant video responses (and ensure detailed information) while letting the trends that mattered most to participants float to the top organically.

Figure 1. Bird’s-eye view of devices shown by participant in Part 3.

Figure 2. Example of in-depth questionnaire about devices listed in Part 3.

The Diary study’s aim was to understand the context of our participants’ lives on a higher level. We analyzed the data with an eye for context of their lived-in spaces and most-used devices. We also used close-ended data collected within the questionnaires to understand relative prevalence of the technologies being used, as well as the frequency and severity of tech-based design barriers and challenges. Once we completed the diary analysis and distilled high-level themes, we moved on to the interview portion of our study.

In-depth Interviews

From the 23 participants who completed the unmoderated diary mission, we selected nine individuals to participate in live, one-on-one interviews. Interview participants were selected to capture a range of diverse experiences based on differing degrees of hearing loss, as well as a distribution across ages, genders, and ethnicities. After studying their responses from the diary mission, we developed a moderator guide that aimed to dive deeper into the information scouts shared in their diary mission. Topic areas explored participants’ work/home tech setup, the assistive technologies and tools they valued most, technology barriers they experienced that related to their hearing loss, and what they envisioned as their perfect device. The interview data were used for a more in-depth follow-up on the initial themes highlighted in the diary study; these data were coded extensively for key themes. Videos and verbatims from both elements of the study, as well as graphs and charts from the diary study, were ultimately used when building our insights.

FINDINGS

While the participants in our study experience their hearing loss in a variety of ways, our results suggest a pattern of common communication challenges that DHH users have when using their technology. Several pain points rose to the top, and we found that the DHH community expressed the greatest need for the following.

Improvements in Video Calling and Live Digital Meetings

Understanding and communicating with others in live, online meetings was the most common source of discomfort, frustration, and exclusion that users discussed. DHH users need improved technical accessibility in these environments, as well as greater understanding of their circumstances and needs from hearing individuals who share their online space. Users shared a variety of examples of challenges in these environments. These included difficulty with lip reading when video quality is poor, the connection lags, or individuals turn their cameras off, and managing multiple screens/streams with chat, captions, and video to keep up with conversations. Some deaf individuals who use Video Relay Services (VRS) and Communication Access Realtime Translation (CART) services noted they were not able to run these on one device and had to set up a second device (such as a laptop or tablet) to see their interpreters and/or transcribers. For those who sign, some platforms will not recognize them in “speaker” mode because that mode reacts only to audio (versus motion). DHH users can also struggle to follow calls with multiple speakers or when people do not speak one at a time.

“None of [my colleagues] know anything about communication with DHH individuals. Some of them always speak at a million miles a minute and it’s so annoying since AI can’t keep up and I can’t understand them. Most people at my company hate turning the camera on as well, even if they are speaking, so I can’t speech read to make sure the closed captioning and the audio is correct.”

– She/her, 37, severe hearing loss

“When I am on a phone call or when I am on a meeting that isn’t a video meeting the biggest challenge is the fact that people without hearing loss don’t think to speak clearly and they often speak over each other. I don’t know how to explain to people that video meetings would work best for me without sounding rude.”

– She/her, 42, moderate hearing loss

“Even though I can comprehend 90% of what is said on a call because I wear headphones that have good speakers, I still miss what is said at times, especially if the person isn’t looking directly at the camera or turned away or looking down or away to read notes. There is a challenge then.”

– He/him, 51, severe hearing loss

“If I’m at a public place, background noise may be an issue. If I’m working outside without a monitor, sometimes it’s inconvenient to keep a window open with captions while paying attention to something else, for example during a videoconference.”

– They/them, 25, moderate hearing loss

Captions and an Improved Caption Experience

The users in our study rely heavily on captions when using their technology, and these are especially crucial for their understanding and participation in online conference calls for work, learning, and entertainment. As one user with profound hearing loss told us, captioning is “so, so important to my life as a hard of hearing individual. This accessibility feature enriches my life, my quality of life, and I use it for learning and entertainment.” But captioning tools and features are far from perfect. Our participants shared a variety of challenges with captions, such as inaccurate captions (generated from automatic speech recognition apps), captions being out of sync with video, obtrusive or distracting placement of captions on the screen, and worst of all – no captions provided at all.

“Automatic speech recognition (ASR) is provided on some social media sites and websites, but is not accurate and can be very off-putting with inaccuracies.”

– She/her, 41, severe hearing loss

“The only feature I use sometimes is the closed captioning for the hearing impaired. Sometimes it helps, other times it is confusing as it lags behind what is actually being said or talked about. So, it is hit-or-miss.”

– He/him, 51, severe hearing loss

“[captions] are specifically stuck on the bottom. So, I’m having to bounce up, back and forth between the interpreter and the captions.”

– He/him, 61, profound hearing loss

“The [online platform] meetings do not offer closed captioning…And so a lot of times I quite honestly, even with my hearing aids, I miss what’s been said. But if I’m listening to a video or music or something like that, it gives me the option to do closed captioning because…part of it is panic that I’m going to miss out on what’s been said. But another part is the reality that I just don’t capture the speech like everyone else does. And so closed captioning is absolutely important for me to be able to participate and follow along.”3

– She/her, 52, moderate hearing loss

DHH users want native and accurate captions to use when speech and audio are the primary modes of communication online. And they want to be able to activate captions on their own and adjust their placement to suit their needs and use cases. Offering captions and improving captioning features for better accuracy and customizable placement would benefit not only DHH users, but anyone who uses captions (e.g., a student who is a non-native language speaker and learning a new language, an employee in a loud environment, or a parent watching a video with a sleeping child nearby).

Hearing-Assistive Device Compatibility

Participants also told us they need improved device compatibility between their hearing-assistive devices – their hearing aids and cochlear implants – and their computers. Many laptops lack the ability to connect directly to these devices via Bluetooth, which is a feature that many smartphones offer. Bluetooth was the device feature that was most valued by the users in our study, and they discussed how both the presence and absence of Bluetooth impacted their tech experiences.

“My cochlear implant connects directly to the phone when I take calls. And that’s been really great. The one big complaint I have about the N-7 [model implant] is that it does not connect to my computer or to my [tablet]. And so that in itself has become frustrating because I still have to use my mini mic.”

– She/her, 27, profound hearing loss

“If my hearing aids could connect to my [digital] photo frame and my…tablet or my laptop – sounds are always clearer if they go directly through my hearing aids instead of them picking up the external sounds.”

– She/her, 66, moderate hearing loss

“Also, maxing out the volume on my computer is sometimes not enough if I’m playing a video or sound byte. I’ll have the volume maxed out, and it’s still not loud enough for me. So if the sound went straight into my hearing aids, this would solve things!”

– She/her, 41, moderate hearing loss

“I can’t use headphones…So that’s why I don’t work in a public space… I would love it if I could find a way to hook up my laptop to my hearing aids. That would make my life so much easier. And I would be able to possibly try working in public spaces.”

– She/her, 27, moderate hearing loss

LEARNINGS: ACCESSIBLE RESEARCH DESIGN

While we identified goals for this study and articulated research questions specific to the tech experiences of DHH users, we also recognized an important internal, reflective goal for ourselves as researchers: to explore ways to make our research design and operations more inclusive and challenge any unconscious biases we may have as hearing researchers who had only, up until this point, conducted research with hearing participants. Through this process, we amassed valuable learnings about designing more accessible research, particularly in the areas of recruitment, providing accommodations and options for participants, and working with platform limitations. In this section, we explore three key areas of consideration for working with the DHH community.

Recruitment Considerations

Finding the Right People: Recruiting Across the Disability Spectrum

The first key consideration we gave thought to was, how do we find the right people for the study? While the question may seem straightforward, it is important to resist the urge to over-simplify for the sake of speed or efficiency. As disability experts and advocates remind us, “If you’ve met one person with a disability, you’ve met one person with a disability” (Lu and Douglis 2022). Disability is not one size fits all, and individuals who have similar disabilities or conditions can have vastly different lived experiences, needs, and preferences. Based on Lenovo’s experience working with individuals who have visual impairments, we understood the need to develop screening criteria that would help us identify the wide spectrum of hearing loss that individuals have, as well as the unique needs of DHH users.

Even before developing the screening criteria for the study, we conducted desk research to make sure our study design was as inclusive as possible from the beginning. Drawing on resources such as published articles, informational and training materials, and the work of disability experts, we educated ourselves on topics related to the different types and degrees of hearing loss, the assistive technologies and services DHH people use, the preferences and various forms of DHH communication, and Deaf culture. This homework was critical in preparing us to better understand the unique needs and preferences that DHH users have. As a result, we were able to avoid a “one size fits all” mindset and accommodate each participant’s individual needs.

Niche Recruits

Another key consideration of recruitment was how to address the logistical challenge of recruiting for a niche population. Although ~15% of the US population reports having some kind of hearing impairment, only 2% have debilitating hearing loss (NIH 2021). We also anticipated that people with severe hearing loss may be unlikely to be part of existing research pools if other platforms or researchers don’t provide proper accommodations for their participation. This turned out to be true: when we began recruiting using dscout’s internal panel, we received 5,000 applications, only 36 of which met our criteria. Of those 36, 32 identified as having “mild or moderate” hearing loss while only four identified as d/Deaf.

We addressed this anticipated challenge through several strategies. First, we set an incentive higher than dscout’s standard recommendation for a study of this scale. We were also prepared with several different recruitment strategies. In addition to using dscout’s internal pool, we enlisted a third-party recruiter for a targeted recruitment aimed specifically at those with severe or profound hearing loss. We also supplemented with an internal network of recruits at dscout and Lenovo.

These strategies combined were ultimately successful. However, they did take substantially longer than a less challenging recruit might. Our recruitment phase lasted three weeks from the launch of our initial screener to our final addition to our project.

Signaling an Accessible Space: Preparing for Future Accommodations

The final recruitment question we asked ourselves was: how do we leverage the recruiting process to build and signal an accessible research environment? To this end, we included questions in the screening questionnaire to better understand a user’s preferred way(s) of communication so that we could provide inclusive response options and accommodations for participants when designing the diary study. For example, applicants were asked, “How do you prefer to convey your ideas when communicating with people in a virtual environment?”, and could then select all that applied from a pick list that included sign languages, sign language interpreter, text/typing, speaking, voice carryover, and hearing carryover. Similarly, applicants were also asked how they understood other people when communicating in a virtual environment.

The addition of these communication needs and preference questions proved critical both in preparing questions for the unmoderated diary study and in coordinating accessibility services for our research operations, in the form of American Sign Language (ASL) interpreters and Communication Access Realtime Translation (CART) live captioning, that would be needed in the live interviews and data analysis. Asking these questions up front also signaled to potential participants that they would have proper accommodations, helping to establish a sense of trust early on.

Considerations of Medium

Why Remote-Forward Design?

For those coming from a background of in-person ethnography, using a remote method – especially an unmoderated survey-based method like a mobile diary study – could raise concerns. Diary studies are a somewhat piecemeal approach to ethnography and could feel like ‘resilience’ at best or a desperate compromise at worst. But we found that using this format provided several methodological advantages over a traditional in-person style.

Firstly, asynchronous methods make it possible to reach niche participants at scale. An already niche recruit would have been that much harder to fill had we been bound by locality or visiting schedules, which are side-stepped in a remote setup. Asynchronous methods also accommodate many more participants with minimal additional effort. Remote-forward methods also bring us into a participant’s natural settings more easily, allowing us access (albeit more limited in scope) to intimate spaces that would be difficult or impossible to see in person.

For example, we wanted to understand the physical setup of each participant’s home workspaces, and so designed an unmoderated activity where they took us on a 2-minute tour of their homes and explained what technologies in their space were important for serving their accessibility needs. While the data is perhaps more limited for each individual participant, we were able to recruit and collect rich video data from 23 participants in a hard-to-reach population within the course of a single week, a next-to-impossible task if using traditional home visits. The videos also feature homes in their ‘natural’ state, where an in-person visit may have prompted participants to clean up or otherwise alter their spaces in

Figure 3. A participant gives a tour of his working space.

Additionally, the largely written and unmoderated format of communication between researcher and participant made it so that much of the study was easily completed regardless of hearing accessibility needs. We also learned through our study that mobile phones are more readily paired with many accessibility devices. Dscout offers omnichannel support, meaning we could have run this on a desktop computer; we were lucky to stumble into the more accessible option.

Considerations and Concerns with Remote Design

Although remote technology offers certain benefits, digital tools have their own challenges that need accommodation. First, we needed to build in extra time for the signed videos collected in the diary study to be translated and returned to us before we could analyze them. Second, the automatic speech recognition (ASR) engines were less accurate for some of our participants who had deaf accents, and their transcripts needed to be hand-corrected before they were useful for analysis (Table 3).

Table 3. ASR v. hand-corrected transcription

| ASR Transcription | Hand-corrected Transcription |
|---|---|
| “Now, the Brahman would not have done what I’m doing. You do know I have to be wounded. Careful not to put the phone too hard. Ah, the way. Oh. And I am warning my swallow only. Whoa! And my hearing no more. I have to tell you, call your mole. My hearing a. Was he a my nephew? Go, go. Da da da. Where do you put the sea? Because Petar don’t want to be broke. Never have a normal here.We just like everyone know that we’re here. Yeah, but you see the good. The car that was. Yo, yo, yo. Ah! What is she doing? A better hope mug. I came for Quinn, though, demolishing the wood. Don’t the turbo the total coil commodity. I will not be booking toy gaming new it communicate with my friend the family.” | “Now the problem with that is when I’m using it on the computer, I have to be really careful not to pull at the cord of the headphones too hard otherwise the headphone breaks, and I’m pretty much royally screwed. And because of my hearing loss, I have to use the telecoil mode on my hearing aid, which you can see here on my left ear. So because of that – that is really frustrating because I want to be able to live and have normal hearing just like everyone else that doesn’t wear hearing aids. But you see, this is the card I was dealt at birth…Without the headphones and the telecoil neckloop technology, I would not be able to enjoy gaming, music, communicating with my friends and family.” |
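One common way to put a number on the kind of gap shown in Table 3 is word error rate (WER): the word-level edit distance between the ASR output and the hand-corrected transcript, divided by the number of words in the hand-corrected transcript. The sketch below is illustrative only and was not part of the study. The function name `word_error_rate` and the short example strings (loosely adapted from Table 3, with punctuation removed) are assumptions made for demonstration.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words.

    `reference` is the hand-corrected transcript; `hypothesis` is the ASR output.
    Both are lowercased and split on whitespace; punctuation is not stripped.
    """
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # prev[j] = edit distance between the reference words seen so far
    # (before the current one) and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]  # matching i reference words against an empty hypothesis
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # reference word missing from hypothesis
                curr[j - 1] + 1,     # extra word in hypothesis
                prev[j - 1] + cost,  # match or substitution
            ))
        prev = curr
    return prev[-1] / len(ref)


# Illustrative excerpt only (hypothetical strings, punctuation removed)
hand_corrected = "i have to be really careful not to pull"
asr_output = "i have to be wounded careful not to put"
print(f"WER: {word_error_rate(hand_corrected, asr_output):.2f}")  # prints "WER: 0.22"
```

A WER of 0 means the ASR output matches the corrected transcript word for word, and values can exceed 1 when the ASR output contains many extra words. Comparing WER across participants could help estimate how much hand-correction time to budget in a similar study.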

Additionally, not every platform is prepared to accommodate complex accessibility needs. For example, at the time of the study, dscout Live (dscout’s moderated research tool) had some barriers to inclusive research that needed to be worked around. First was the lack of captions. As discussed in our findings section, captioning via ASR – while far from perfect – is considered a crucial accessibility need by many DHH people. Second, the Live product did not have a third video stream option. Three of our scouts used ASL to communicate and required a live interpreter. Without a third video stream, this essential accessibility service could not be provided. And in a remote setting, there was no workaround in dscout for these issues.

To address these issues, we worked closely with an inclusive communications service provider to adapt our approach. We still used dscout Live for our recruitment, scheduling, and payment processes, but for the interviews themselves we used Zoom. Zoom is not a purpose-built research tool, and as such required some extra steps on the backend to prepare for analysis. However, it had the accessibility features that we required for this project. ASL interpreters and a CART transcriber were hired through the service for users who needed these accommodations. Feedback about the barriers in dscout’s Live tool was delivered to the product team, and those barriers are now being addressed (see “Moving Forward” section).

Data Collection

Collecting Asynchronous Data

One of our key considerations when designing our mobile ethnographic study was how to collect asynchronous qualitative data. Dscout as a platform was built around collecting video data from participants. We knew from experience that videos are highly valuable tools for building empathy among stakeholders, as well as for collecting more in-depth answers than open-ended text responses normally provide. However, since we had recruited some participants who don’t vocalize, recording video could pose a challenge, especially since mobile phones need to be held or propped up with one hand while taking selfie-style videos. The question became: how do we accommodate their language (ASL) while still collecting data that is as rich as possible?

We experimented with two different answers to this question. In our screening process, we made video optional, allowing participants to opt out and write their answers instead. As a result, we saw a significant difference in both the quantity and quality of information gathered in the written responses versus the spoken or signed responses. In the diary study itself, we opted to make the videos non-optional. We accommodated this choice by enlisting an ASL interpreting service and by including language early and often that signing was encouraged if it was our participant’s main or preferred method of communication. We built in extra time after the diary study closed to have these videos transcribed, captioned, and voiced over. The captions were re-uploaded into dscout’s video viewer for analytical reference.

The videos we have are powerful tools to demonstrate our findings and are considered especially valuable for emphasizing a user’s individuality. These were developed into curated reels and incorporated into internal deliverables for Lenovo stakeholders. However, some participants did encounter some unexpected difficulties with dscout’s video recording software. Outside of the aforementioned difficulties of taking video while signing, there was an added issue wherein dscout measured “quality” of video response by how much was spoken. For signing scouts, this meant that some videos without sound were read by the platform as an error and prompted a re-upload where none was actually necessary. This feature was an attempt to make the platform more convenient for researchers by reducing video upload error rates, but ultimately didn’t take into account the non-audio use case.

Collecting Synchronous Data: Working with Interpreters

The concerns of collecting synchronous qualitative data feel more analogous to in-person accessibility concerns. Our main question was: how do we respectfully and effectively communicate in real time with participants who don’t vocalize? To prepare, we took steps to educate ourselves about best practices for working with ASL interpreters and the pain points that so many DHH people experience with video conferencing (Kushalnagar and Vogler 2020). As a result, we made important adjustments to how we planned and conducted these interviews, which included:

- Turning on the closed captions in Zoom before the video interview began. Zoom’s default is to not show captions, so before each interview, we enabled closed captions so that no participant had to specifically request them (a thoughtful inclusive practice, even in daily life for online meetings).

- Labeling the interpreter or transcriber in Zoom (also called “renaming”) before the session begins, to indicate their identity for the interview participant.

- Providing the ASL interpreter and CART transcriptionist with our moderator guide/interview questions to preview several days before the sessions.

- Allowing a few minutes before starting the interview for the signing person to communicate with the ASL interpreter about their signing style, rhythm, and the like, to allow for smoother interpretation.

- Looking at and speaking directly to the person who is signing, and not at the interpreter.

- Allowing for pauses and a delay of a few seconds when working with an interpreter. It is easy to interrupt the interpreter if you are not focused on the DHH participant and fail to notice that they are signing while you speak.

Moving Forward

Both dscout and Lenovo learned a lot in this research process. We as researchers intend to take these learnings forward in our organizations. The results were shared out internally at Lenovo, and designers are working to innovate and incorporate these findings into future planning. However, the more immediate impact has been based on our learnings for inclusive research design, which are manifesting in several ways at both organizations.

Improved Platform Accessibility

After the project’s conclusion, we collaborated with dscout’s Product Researchers to collect feedback from our participants about using the app. Between the feedback we collected and the learnings from this mission, we were able to build a business case for several key product improvements:

- Videos without sound were occasionally being erroneously flagged as ‘errors’, which effectively othered signed responses; this has been flagged as a bug and is currently being corrected.

- Dscout Live was not an option for this project due to the lack of live transcription software. Dscout has taken this to heart and will begin rolling out live automatic captioning in dscout Live on October 19, 2022.

- Plans are also being made for a multi-moderator mode of dscout Live. This will allow more than one “researcher” to be present at a time; while this has many use cases in the research world, it will notably allow for researchers to be on-call with interpreters or translators.

Combined, these features will eliminate the biggest barriers to use for DHH users in the current iteration of our platform.

Inclusive Design Best Practices

Both Lenovo and dscout are working on crystallizing their learnings and sharing them with the wider research practices within their organizations. These concrete best practices currently include:

- Allocating budget for transcription and translation services, to allow for signing respondents to participate fully in the project;

- Offering multiple means of responding to key questions, including speech, signing, or writing, depending on the needs of participants and researchers;

- Building in time to do advance desk research on key demographics and demonstrating that understanding to participants;

- Asking participants, no matter the study’s focus, whether they need accommodations to fully participate in the study at hand.

We fully believe, and are intent on communicating to our organizations, that these best practices are crucial for running research on accessibility. But in addition, the “curb cut effect” also applies to research; these learnings will not only improve accessibility research but will make all research design more flexible and respectful for participants.

CONCLUSION: NOTHING ABOUT US WITHOUT US

“Nothing about us without us” is a phrase that has come to signify the disability rights movement, and as disability rights activist James Charlton has written, “expresses the conviction of people with disabilities that they know what is best for them” (Charlton 2000). Lenovo and dscout recognize that asking customers with disabilities about their experiences, and doing so thoughtfully, is essential to developing more accessible products. This, in turn, can impact far more people than we might imagine, resulting in better experiences for everyone. Including individuals with disabilities at the user research table and designing research that allows them to participate in ways that are best for them has given us the opportunity to better understand the role technology plays in their day-to-day lives. The disability community has historically been left out of these conversations, and some users in our study acknowledged this and expressed appreciation for being included:

“I think it’s worthwhile what you all are doing because I’ve not had anybody ever ask me about how it is to live as a person that’s hard of hearing…And it’s a significant handicap to have a hearing loss because to look at you, you wouldn’t know. But to just have somebody take an interest in that segment of the population, I think is worthwhile. So, thank you.”

– She/her, 40, moderate hearing loss

Hearing the unique perspectives of users with disabilities also puts in stark relief the power we have as tech companies to promote equity and inclusion on a larger cultural level through product design and brand purpose. When one participant with severe hearing loss discussed the kinds of assistive technologies he relies on, he added, “I also rely on human understanding, empathy, [and] compassion so that technology designers and developers create inclusive products that make me feel like an equal member of society.”

The participants in our study discussed the numerous tech obstacles they experience each day, as well as how they adjust and practice resilience when experiencing those challenges. The burden of finding workarounds and adapting falls on many disabled individuals, who must make extra efforts to navigate a world that is not, as several of our participants noted, made for them. However, if we are researching and designing to include their perspectives from the ground up, then ideally, individuals with disabilities would not have to spend time and energy trying to find ways to make their products work for them. The onus should shift to businesses and organizations to adapt and be resilient in their product design. Taking these steps has the potential to add great value to the lives of our customers. Embracing this responsibility of corporate citizenship can contribute to improving accessibility and inclusion for all users.

Dana C. Gierdowski is a Senior Manager for Lenovo’s User Experience Design Research team, where she leads initiatives and supports cross-functional hardware, software, and emerging projects. She is an experienced qualitative researcher and an accessibility ally. Her passion for accessibility stems from her experience as a teacher and education researcher.

Karen Eisenhauer is dscout’s Original Research Lead. She works with organizations across industries to produce innovative original research and showcase research best practices using dscout’s suite of tools. She’s also a contributor to dscout’s industry leading publication, People Nerds, where she regularly writes on issues of ethical and accessible research practices.

Peggy He works as a User Experience researcher at Lenovo. With an academic background in Human-Centered Design & Engineering and Decision Science, she is passionate about observing, analyzing, and understanding human behaviors and underlying needs. With more exposure to accessibility research in her work, she hopes to help make designs more inclusive.

NOTES

Acknowledgments – Thank you to our employers for their support of and commitment to accessibility research and inclusive product design. We are especially grateful to our research participants who took the time to share their lived experiences with us. Thank you for your grace and patience as we continue our accessibility journey. We are better researchers for having learned from you.

LENOVO is a trademark of Lenovo. All product names, logos, brands, trademarks, and registered trademarks are property of their respective owners. All company, product and service names used in this case study are for identification purposes only. Use of these names, trademarks, and brands does not imply endorsement.

1. We use a capital “D” in “Deaf” to denote individuals who have self-identified as culturally Deaf and self-identify as members of the Deaf community. The use of a lowercase “d” in “deaf” refers to individuals who do not self-identify as culturally Deaf or part of the Deaf community. We also use the lowercase “d” in “deaf” when characterizing one’s audiological status/condition.

2. We asked participants to self-identify into different levels of hearing loss based on the following definitions:

- Mild hearing loss: difficulty understanding normal speech, especially with background noises (e.g., Conversations are easier to hear without background noises, such as TV or radio)

- Moderate hearing loss: difficulty understanding most normal speech even with no background noises (e.g., Conversations and TV volumes may become louder even when there is no background noise, so they’re easier to hear)

- Severe hearing loss: difficulty understanding even loud speech and will not perceive most noises (e.g., Sounds such as airplanes and lawnmowers can be more challenging to hear without amplification or an assistive listening device)

- Profound hearing loss: cannot perceive even loud speech and noises (e.g., Louder decibel sounds such as sirens may be perceived as vibrations instead of sound)

- Other (please specify)

3. Captioning is available in the Zoom platform; however, it must first be enabled by the meeting organizer.

REFERENCES

Blackwell, Angela G., 2017. The Curb-Cut Effect (SSIR). [online] Ssir.org. Accessed July 1, 2022. https://ssir.org/articles/entry/the_curb_cut_effect.

Charlton, James I., 2000. Nothing About Us Without Us. Oakland: University of California Press.

Lenovo Group Limited. “2021/22 Environmental, Social and Governance Report.” Accessed August 26, 2022. https://investor.lenovo.com/en/sustainability/reports/FY2022-lenovo-sustainability-report.pdf.

Lu, Thomas and Sylvie Douglis. 2022. “Don’t be scared to talk about disabilities. Here’s what to know and what to say.” (NPR). [online]. Npr.org. Accessed July 1, 2022. https://www.npr.org/2022/02/18/1081713756/disability-disabled-people-offensive-better-word.

Kushalnagar, Raja S. and Christian Vogler. 2020. “Teleconference Accessibility and Guidelines for Deaf and Hard of Hearing Users.” Presented at ASSETS ’20, October 26–28, 2020, Virtual Event, Greece.

National Institute on Deafness and Other Communication Disorders. 2021. “Quick Statistics About Hearing.” Accessed July 11, 2022. https://www.nidcd.nih.gov/health/statistics/quick-statistics-hearing.

Qiao, Gina and Yolanda Conyers. 2005. The Lenovo Way: Managing a Diverse Global Company for Optimal Performance. New York: McGraw Hill.

World Health Organization. 2021. “Health Topics: Deafness and hearing loss.” Accessed February 16, 2022. https://www.who.int/news-room/fact-sheets/detail/deafness-and-hearing-loss.